Understanding Operating System Requirements for Docker Images: Do You Need to Specify OS for Every Docker Image?

In the dynamic landscape of modern software development and IT operations, Docker has emerged as an indispensable tool, revolutionizing how applications are built, shipped, and run. Its promise of agility, portability, and control has transformed the software supply chain, making it easier for development and operations teams to collaborate effectively. Yet, amidst the enthusiasm for containerization, a fundamental question frequently arises, particularly for IT administrators and developers new to the ecosystem: “Do I need to specify the operating system for every Docker image?”

This question touches upon the core architectural differences between traditional virtualization and containerization, delving into how Docker images are constructed, the role of the host operating system, and the practical implications for application deployment and management. Through this article, we’ll explore these nuances, providing clarity on Docker’s approach to OS dependencies and offering comprehensive insights into best practices for leveraging this powerful technology.

The Core Difference: Containers vs. Virtual Machines

At its heart, Docker is an open platform designed to streamline the lifecycle of applications. It enables IT operations and development teams to package an application with all its necessary components—libraries, system tools, code, and runtime—into a standardized unit called a Docker container. This standardization ensures that the application behaves consistently across any environment where a Docker Engine is installed, irrespective of the underlying infrastructure or programming language used.

However, to fully grasp the OS requirements for Docker images, it’s crucial to understand how Docker containers fundamentally differ from traditional Virtual Machines (VMs). This distinction clarifies why an explicit OS specification for every image isn’t always necessary in the way one might assume from a VM-centric perspective.

Docker’s Approach to Operating Systems

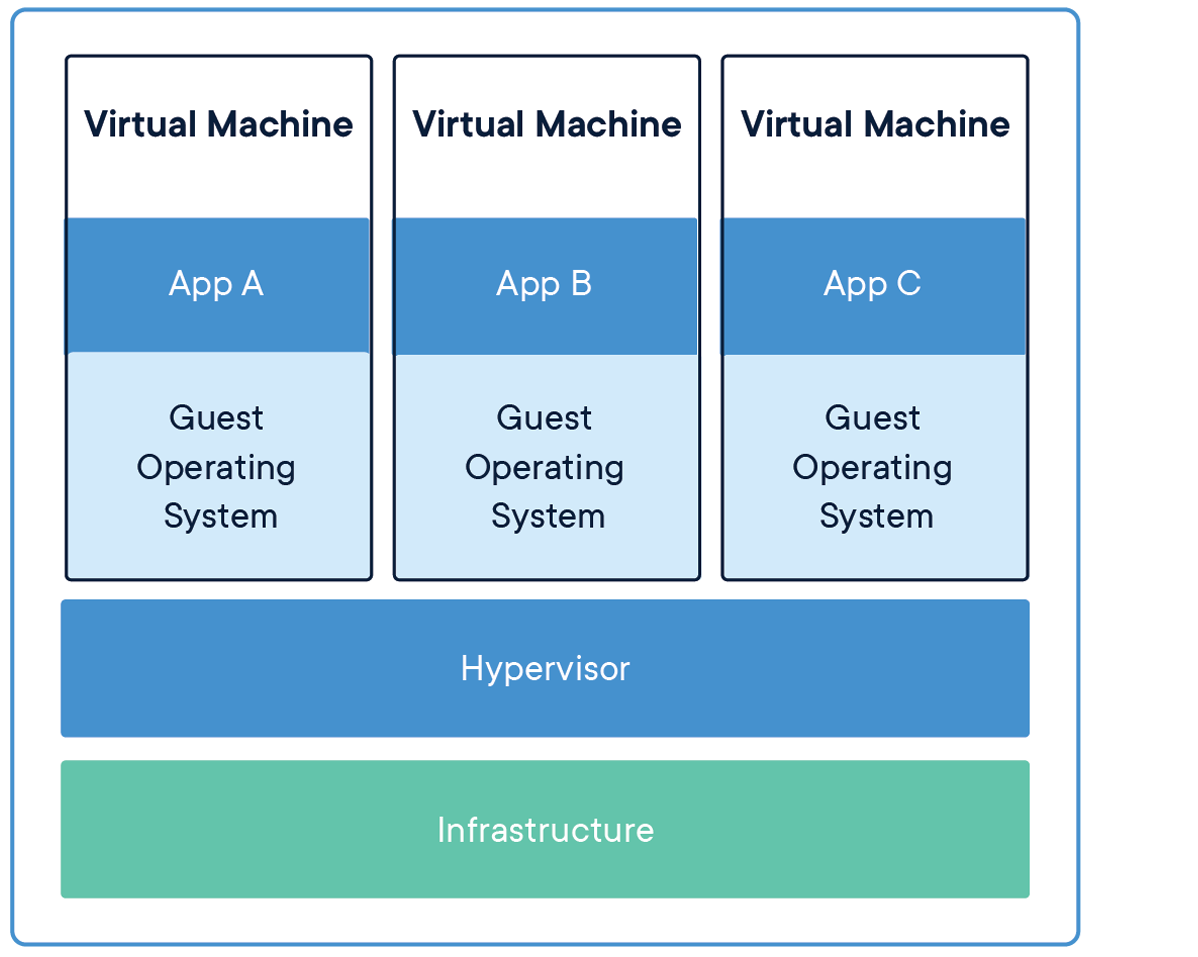

Containerization, as championed by Docker, offers a significantly different model from virtualization. A virtual machine virtualizes the hardware: it requires a hypervisor and runs a full guest operating system inside each VM. Each VM therefore contains its own kernel, libraries, and binaries, which leads to larger images (often gigabytes) and longer startup times. The figure below illustrates the contrast, with containerization on the left and virtualization on the right: containers need neither a hypervisor nor a separate guest OS per workload.

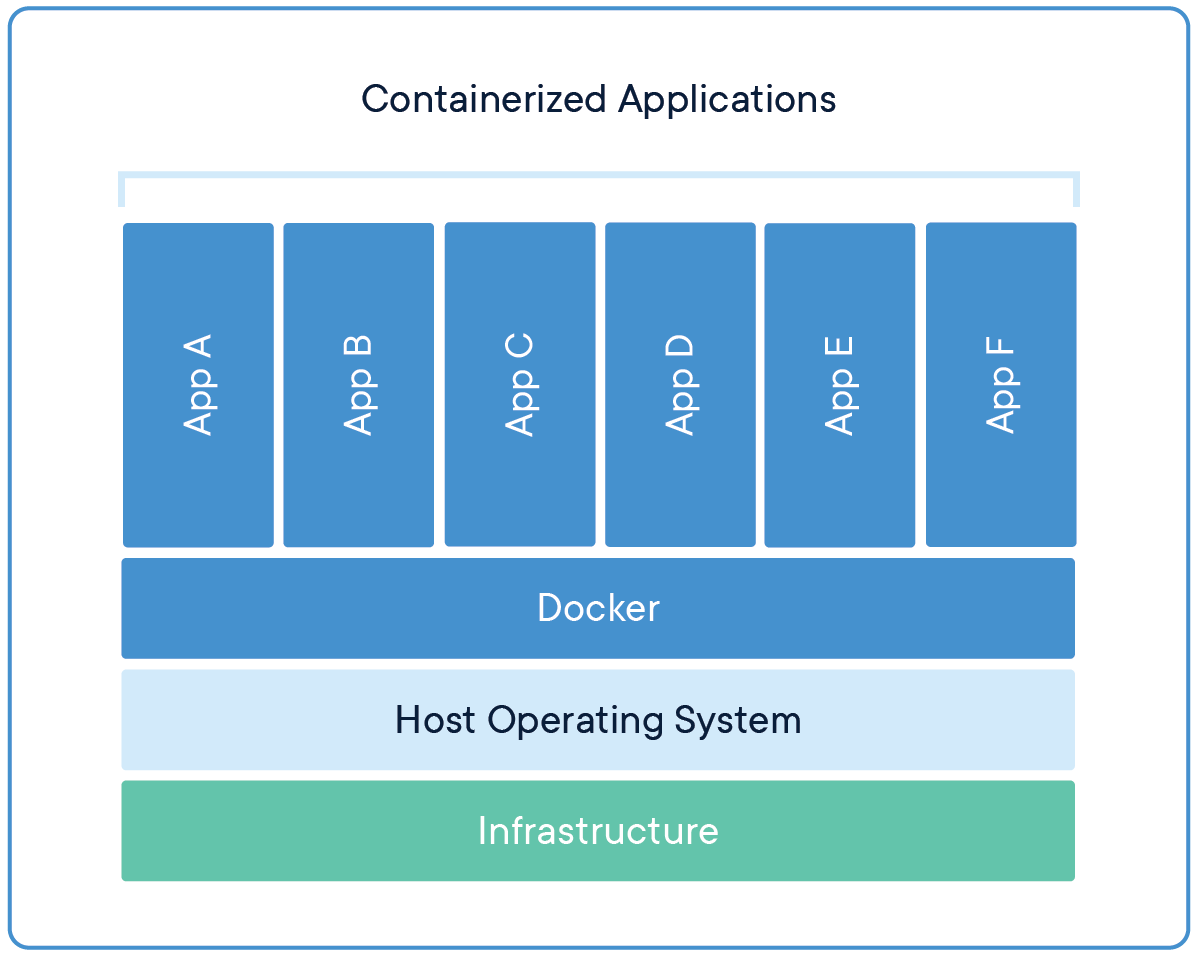

In contrast, Docker containers “virtualize” the operating system. The Docker Engine, which is the software installed on a physical server, VM, or cloud instance, leverages the kernel of the host operating system. Multiple Docker containers running on the same host share this single underlying kernel. Consequently, a Docker container does not contain a full OS within itself. Instead, it encapsulates the application along with everything the application needs to run, such as specific libraries, dependencies, and configuration files, but it shares the host’s kernel. A container is, in effect, an isolated process (or group of processes) running against its own root file system.

This architectural choice makes containers very lightweight (typically megabytes) and allows them to start and stop almost instantaneously. While each container is isolated from others on the same host (though they can be networked together), they rely on the host OS for kernel-level operations. This implies that a container with Linux binaries cannot run directly on a Windows kernel and vice versa, as container and host must share a compatible kernel. (Docker Desktop on Windows and macOS runs Linux containers by hosting them inside a lightweight Linux VM, so the rule still holds.) Thus, while you don’t package a new OS into every image, the base image implies an OS context (e.g., a Linux-based image for a Linux host, or a Windows-based image for a Windows Server host), aligning with the host’s kernel.
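One way to see the shared kernel for yourself is the small sketch below. It assumes a Linux-based image (the `alpine:3.20` tag is just an illustrative choice) and prints two things at startup: the distribution information shipped in the image, and the kernel release the container is actually running on.

```dockerfile
# Minimal sketch: userspace comes from the image, the kernel from the host.
FROM alpine:3.20

# /etc/os-release is part of the image's Alpine userspace.
# `uname -r` reports the kernel the container runs on, which is the host's
# kernel (or the kernel of the Linux VM when using Docker Desktop).
CMD ["sh", "-c", "cat /etc/os-release; uname -r"]
```

Building this with `docker build -t kernel-check .` and running it with `docker run --rm kernel-check` (the image name is a placeholder) should show Alpine's release file next to a kernel version that matches the host rather than anything shipped in the image.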

Benefits of Containerization

The “Dockerizing” of environments brings a host of compelling advantages, enabling enterprise teams to leverage a Containers as a Service (CaaS) platform for enhanced agility, portability, and control.

For developers, Docker dramatically accelerates the application lifecycle. They can quickly build and ship applications, moving from development through testing and staging into production without recoding. This portability means applications run consistently across any environment where Docker Engine is installed, which reportedly leads to releases that are up to 13 times more frequent. Debugging becomes simpler, allowing for rapid creation of updated images and swift deployment of new application versions.

IT operations teams gain robust tools for managing and securing their infrastructure. The Docker CaaS platform offers enterprise-grade security features like role-based access control (RBAC), integration with LDAP/AD, and image signing. This empowers developers with self-service capabilities while maintaining centralized control and security for operations.

Furthermore, Docker significantly optimizes infrastructure utilization. Its lightweight nature, compared to VMs, coupled with the ability to run multiple Docker containers within a single VM, can lead to infrastructure optimization by as much as 20x, resulting in substantial cost savings. Workloads can be migrated effortlessly between cloud providers (e.g., AWS to Azure) or from cloud to on-premises data centers and back, without requiring application recoding or experiencing downtime. This unparalleled portability ensures businesses can choose the best infrastructure for their needs, avoiding vendor lock-in.

The Docker Engine itself is remarkably lightweight, often weighing around 80 MB, making it a minimal footprint requirement on any host (bare metal server, VM, or public cloud instance). A “Dockerized node” simply refers to any host with the Docker Engine installed and running, whether on-premises or in the cloud.

Deconstructing Docker Images: The Role of the Dockerfile

Understanding Docker images requires a close look at the Dockerfile, the central artifact that defines how an image is constructed. Confusion often arises from the interchangeable use of “image” and “container,” so the distinction is worth stating plainly. An image is a static, read-only template, while a container is a live, running instance of that image. You can compare an image to a blueprint and a container to the building constructed from that blueprint.

Building and Tagging Images

A Dockerfile is a plain text document containing a sequence of instructions that Docker reads to build an image automatically. Instructions that change the file system (such as RUN, COPY, and ADD) each create a new layer, while the others only record metadata, so the final image is a stack of read-only layers. This layered approach is critical for efficiency: unchanged layers are cached, which accelerates subsequent builds.

Key Dockerfile instructions and their roles include:

- FROM: Specifies the base image (e.g., ubuntu:18.04, node:14). This is where the initial OS context (e.g., a minimal Linux user space) is defined.

- RUN: Executes commands during the image build process (e.g., apt-get install nginx).

- COPY: Copies local files/directories into the image. ADD is a more feature-rich but generally less recommended alternative.

- EXPOSE: Informs Docker that the container listens on the specified network ports at runtime.

- CMD: Sets the default command to execute when a container starts. It can be overridden.

- ENTRYPOINT: Configures a container that will run as an executable.

- WORKDIR: Sets the working directory for subsequent instructions.

- ENV: Sets environment variables within the image, available during build and runtime.

- LABEL: Adds metadata to an image.

- ARG: Defines build-time variables, not available in the running container.

- VOLUME: Creates a mount point for external volumes.

- USER: Sets the username or UID for subsequent instructions.

- SHELL: Overrides the default shell for RUN, CMD, and ENTRYPOINT.
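To show how several of the instructions listed above fit together, here is a hedged sketch for a small Node.js service. The file names (`package.json`, `server.js`), the port, and the label value are hypothetical placeholders, and the `node:20-slim` tag is only an illustrative choice of base image.

```dockerfile
# ARG before FROM: a build-time variable used to pick the base image tag.
ARG NODE_VERSION=20
FROM node:${NODE_VERSION}-slim

# LABEL adds metadata; ENV persists into the running container.
LABEL org.opencontainers.image.title="example-app"
ENV NODE_ENV=production

# WORKDIR applies to the RUN, COPY, CMD, and ENTRYPOINT steps that follow.
WORKDIR /app

# Copy the manifest first so the dependency layer stays cached
# until package.json changes.
COPY package.json ./
RUN npm install --omit=dev

# Copy the rest of the (hypothetical) application source.
COPY . .

# The official node image ships an unprivileged "node" user.
USER node

# EXPOSE documents the port; it does not publish it.
EXPOSE 3000

# ENTRYPOINT fixes the executable; CMD supplies a default argument
# that can be replaced at `docker run` time.
ENTRYPOINT ["node"]
CMD ["server.js"]
```

With this layout, `docker run <image>` starts `node server.js`, while `docker run <image> other.js` keeps the entrypoint and swaps only the argument.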

To illustrate, consider building a Docker image for an Nginx web server with a custom index.html. You would create a Dockerfile with instructions like FROM ubuntu:18.04, RUN apt-get update && apt-get install -y nginx, COPY files/index.html /usr/share/nginx/html/index.html, EXPOSE 80, and CMD ["/usr/sbin/nginx", "-g", "daemon off;"]. The apt-get update step and the -y flag matter here: a fresh base image has no package lists, and a build cannot answer interactive prompts. After creating the necessary index.html and default Nginx config files in a files subdirectory, you build the image by running docker build -t nginx:1.0 . (note the trailing dot). The -t flag tags the image with a name (nginx) and version (1.0). The trailing . specifies the build context: your current directory, containing the Dockerfile and the files subdirectory.
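Written out as a file, the Nginx example above might look like the following sketch. It runs `apt-get update` before the install and passes `-y` so the step can complete non-interactively. The config file name `files/default` and its destination are assumptions: the article mentions a default Nginx config in the `files` subdirectory but does not name it, and this sketch assumes that config points the site root at `/usr/share/nginx/html`.

```dockerfile
# Sketch of the Nginx image described above.
FROM ubuntu:18.04

# Refresh package lists, install nginx without prompts, then drop the
# lists again to keep the layer small.
RUN apt-get update \
 && apt-get install -y --no-install-recommends nginx \
 && rm -rf /var/lib/apt/lists/*

# Assumption: files/default is a site config whose root is
# /usr/share/nginx/html (Ubuntu's packaged default uses /var/www/html).
COPY files/default /etc/nginx/sites-available/default

# Custom landing page from the build context.
COPY files/index.html /usr/share/nginx/html/index.html

EXPOSE 80

# Run nginx in the foreground so the container stays alive.
CMD ["/usr/sbin/nginx", "-g", "daemon off;"]
```

From the directory containing this Dockerfile, `docker build -t nginx:1.0 .` builds the image, and `docker run -d -p 8080:80 nginx:1.0` would serve the page on host port 8080.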

Tagging images is crucial for version control and reproducibility. Teams often use stable tags (e.g., nginx:stable which gets updates over time) or unique tags (e.g., nginx:20231027-build123) for immutable traceability. Semantic versioning (SemVer) is highly recommended for production environments. Docker also intelligently caches build steps, speeding up subsequent builds where layers haven’t changed.

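For multi-line commands within a Dockerfile, heredoc syntax offers a cleaner way to group instructions and improves readability. It requires BuildKit and Dockerfile syntax 1.4 or later. Because the heredoc body runs as a single shell script, start it with set -e so that a failing command stops the build. For example:

    RUN <<EOF
    set -e
    apt-get update
    apt-get upgrade -y
    apt-get install -y nginx
    EOF

Dockerfile Best Practices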

Crafting efficient and secure Docker images involves adhering to several best practices:

- Use .dockerignore: Exclude unnecessary files and directories from the build context to increase build performance and reduce image size.

- Choose Trusted Base Images: Always start with official or organization-approved base images from reputable registries (e.g., Docker Hub, Google Cloud, AWS ECR, Red Hat Quay). Regularly update these images to patch vulnerabilities.

- Minimize Layers and Image Size: Each RUN instruction creates a layer. Consolidate commands where possible (e.g., RUN apt-get update && apt-get install -y ...) to reduce the number of layers, enhancing build performance and minimizing image footprint. Aim for minimal base images like Alpine (about 5 MiB) or distroless images (often about 2 MiB) for production, which also reduces the attack surface.

- Run as a Non-Root User: To mitigate security risks, configure your container to run processes as a non-root user.

- Use Specific Tags: Avoid the latest tag in production to ensure builds are reproducible and prevent unexpected breaking changes.

- Single Process Per Container: Embrace the “KISS” principle (Keep It Simple, Stupid). Ideally, each container should run a single, focused process. This simplifies scaling, management, and monitoring.

- Multi-Stage Builds: Use multi-stage builds to separate build-time dependencies from runtime dependencies, resulting in smaller, more efficient final images.

- Avoid Sensitive Information: Never bake credentials or sensitive data directly into your Dockerfile or image layers. Use environment variables, Docker secrets, or bind mounts instead.
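Several of these practices can be combined in one file. The sketch below is illustrative only: it assumes a small Go module (with `go.mod` and a `main` package) sits in the build context, and the `golang:1.22` and `alpine:3.20` tags, the `app` user, and the binary path are hypothetical choices rather than recommendations from the article.

```dockerfile
# Stage 1: build with the full toolchain (pinned tag, not "latest").
FROM golang:1.22 AS build
WORKDIR /src
COPY . .
# CGO_ENABLED=0 produces a static binary that does not depend on the
# build image's C library.
RUN CGO_ENABLED=0 go build -o /out/app .

# Stage 2: small runtime image containing only the binary.
FROM alpine:3.20

# Create an unprivileged system user and group in a single layer.
RUN addgroup -S app && adduser -S -G app app

# Only the compiled artifact crosses the stage boundary; compilers and
# source code stay behind in the build stage.
COPY --from=build /out/app /usr/local/bin/app

# Run as the non-root user created above.
USER app

ENTRYPOINT ["/usr/local/bin/app"]
```

The final image carries the Alpine userspace plus one binary, while the Go toolchain exists only in the discarded build stage. A `.dockerignore` listing directories such as `.git` would further shrink the context sent by `COPY . .`.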

Selecting Your Base Image: Architecture, Security, and Performance

The decision of which base image to use is akin to choosing a Linux distribution for a server, with profound implications for the application’s reliability, supportability, and ease of use over time. A container base image is, in essence, a minimal install of an operating system’s userspace. This choice directly impacts the availability and compatibility of programming languages, interpreters (Python, Node.js, PHP, Ruby), and their essential dependencies (glibc, libseccomp, openssl, tzdata). Even interpreted languages or JVM-based applications rely heavily on these underlying operating system libraries for complex functionalities like encryption or database drivers.

The concept of “distroless” images, while appealing for their minimal size, doesn’t truly mean “OS-less.” They are still built from a dependency tree created by a community; they merely remove the package manager. While this can reduce the attack surface, it shifts the burden of dependency management and vulnerability patching (e.g., for CVEs) onto the user. Proven Linux distributions with established package managers (Yum, DNF, APT) and robust update mechanisms handle these challenges more effectively, making them a safer choice for most enterprise environments.

C Libraries and Core Utilities

A critical architectural consideration is the choice of the C library. Most Linux distributions use glibc, known for its broad compatibility, extensive testing, and reliability across a wide range of software. Alpine Linux, however, uses musl libc, which helps it achieve its very small footprint. While musl contributes to tiny image sizes, it can introduce compatibility challenges. For example, Python builds on Alpine can be significantly slower when a package has no prebuilt musl-compatible wheel and must be compiled from source, and developers often encounter missing packages or syslog-related problems (due to BusyBox utilities). Many distributions that initially experimented with smaller C libraries eventually reverted to glibc due to its universal functionality and stability. The choice of C library can profoundly affect software compilation, runtime behavior, performance, and security.
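A simple way to compare a glibc-based and a musl-based image for your own workload is to parameterize the base image. The sketch below assumes a hypothetical `requirements.txt` in the build context; the `python:3.12-slim` (Debian, glibc) and `python:3.12-alpine` (musl) tags are illustrative choices.

```dockerfile
# Build-time switch for the base image; defaults to the glibc variant.
ARG BASE=python:3.12-slim
FROM ${BASE}

WORKDIR /app

# Hypothetical dependency list. Packages without a prebuilt wheel for
# the image's C library are compiled from source during this step,
# which may need extra build tools in the image.
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
```

Building once with the default and once with `docker build --build-arg BASE=python:3.12-alpine -t deps-alpine .` (the tag name is a placeholder) lets you compare build time and final size for the same dependency set, rather than judging by base image size alone.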

The ecosystem of package formats (apk, rpm, dpkg) and dependency management tools (apk, yum, apt, dnf) also plays a role. These tools are meticulously maintained by distribution communities, providing a robust framework for managing software dependencies and ensuring consistent environments.

Lifecycle and Support Considerations

For enterprise-grade deployments, the long-term lifecycle and support policies of a base image are paramount.

- Support Policies: Distributions that backport patches for bugs and security issues are invaluable. This approach means that apt-get upgrade or yum update can resolve issues without requiring developers to port their applications to entirely new base images, saving significant development time and ensuring stability in CI/CD pipelines.

- Life Cycle: Containers, like VMs, often run in production for extended periods. Base images with long-term support (e.g., 5-10 years) provide stability and reduce the overhead of frequent migrations. Compatibility guarantees within minor versions are vital for predictable builds.

- ABI/API Commitment: The compatibility between the C library in the container image and the host’s kernel is crucial. Rapid changes in the C library without synchronization with the host can lead to breakage.

- ISV Certifications: A strong ecosystem of Independent Software Vendor (ISV) certifications for a base image ensures broader compatibility and easier integration with third-party software, offloading work to vendors and communities.

Vendors like Red Hat excel in this area, with dedicated product security teams proactively analyzing code, patching vulnerabilities, and producing extensive security data. Resources such as Red Hat’s Container Health Index help users evaluate container base images for security.

Size and Efficiency: A Nuanced View

While a smaller image size is often perceived as better, it’s not the sole determinant of efficiency. The focus should be on the final size of the application with all its dependencies, not just the base image. Different workloads might result in similar final sizes across various distributions. More importantly, good supply chain hygiene and leveraging Docker’s layering mechanism for caching common components offer greater efficiency at scale. Layers that are used repeatedly can be pushed into parent images, leading to faster pulls and builds across an organization, even if individual base images are slightly larger.

A critical point of caution is mixing images and hosts. Running containers built on one OS (e.g., a specific Linux distro) on a host running a different, incompatible OS can lead to issues ranging from tzdata discrepancies to kernel compatibility problems. A standardized base image across an organization, while sometimes met with initial resistance, ultimately promotes efficiency, supportability, and predictability in large-scale deployments.

Practical Container Management: Commands and Workflows

Operating within the Docker ecosystem requires a foundational understanding of how containers function and the commands used to manage them. From images as blueprints to the lifecycle of running applications, Docker provides a powerful yet intuitive interface for developers and administrators.

Docker images are the fundamental building blocks, constructed using a layered file system in which each file-system-changing instruction in a Dockerfile adds a new, read-only layer. When a container is launched from an image, a thin, writable layer is added on top. This container instance runs in isolation, with its own file system, process space, and network interfaces, all while sharing the host OS kernel. The Docker Engine orchestrates this entire process, comprising a daemon that manages containers, a REST API for interaction, and a command-line interface (CLI) for user commands.

A container’s lifecycle progresses through creation (pulling an image and adding a writable layer), execution (running the specified command), modification (changes stored in the writable layer), and termination (resources released, writable layer preserved unless deleted). This ephemeral nature reinforces the “immutable infrastructure” paradigm, where containers are easily replaced rather than patched in place.

Networking and data persistence are crucial. Containers feature an isolated network stack, but Docker facilitates port mapping (e.g., -p 8080:80) to expose container services to the host and external networks. For persistent data, Docker volumes are the preferred mechanism, managing data independently of the container’s lifecycle. Bind mounts are useful for local development, directly linking host directories into containers.

Common Docker Commands

Effective container management hinges on familiarity with essential Docker CLI commands:

- docker ps: Lists all running containers, showing their IDs, names, images, ports, and status.

- docker ps -a: Lists all containers, including those that are stopped.

- docker run [options] <image>: Creates and starts a new container from an image. Key options include:
  - -p <host_port>:<container_port>: Maps a port on the host to a port in the container.
  - -d: Runs the container in detached (background) mode.
  - -it: Runs in interactive mode, often used to open a shell inside the container.
  - --name <container_name>: Assigns a custom name to the container.
  - -e <VAR>=<value>: Sets environment variables inside the container.
  - -v <host_path>:<container_path>: Mounts a volume or bind mount.

- docker stop <container_id_or_name>: Gracefully stops a running container.

- docker kill <container_id_or_name>: Forcefully stops a running container.

- docker rm <container_id_or_name>: Removes a stopped container. Use -f for forceful removal of running containers.

- docker restart <container_id_or_name>: Restarts a container.

- docker logs <container_id_or_name>: Displays logs from a container. Use -f to follow logs in real-time.

- docker exec [options] <container_id_or_name> <command>: Executes a command inside a running container (e.g., docker exec -it myapp bash).

- docker inspect <container_id_or_name>: Provides detailed information about a container’s configuration and state.

- docker pause <container_id_or_name> / docker unpause <container_id_or_name>: Pauses or unpauses all processes within a container.

- docker attach <container_id_or_name>: Attaches to a running container’s STDIN, STDOUT, and STDERR.

- docker cp <local_path> <container_id_or_name>:<container_path>: Copies files between the host and a container.

Handling Data Persistence and Networking

A key principle in container design is immutability. Containers should be thought of as stateless processes that can be stopped and replaced at any moment without data loss. To achieve this, any data that needs to persist beyond the container’s lifecycle must be stored externally using Docker Volumes. Volumes are managed by Docker and can be moved, copied, and backed up independently of containers. For local development, bind mounts are often preferred, linking a directory on the host machine directly into the container, providing immediate access to source code changes.

Regarding networking, a container’s ports are not reachable from outside the host by default, which keeps application ports isolated from external traffic. To make a web application accessible, you must explicitly publish the container’s port to the host machine using the -p flag during docker run. For instance, docker run -p 80:80 nginx runs an Nginx container and makes its internal port 80 accessible via the host’s port 80.
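The persistence and networking points above can be sketched from the image side as well. In the example below, the `nginx:1.27` tag, the image name `example-site`, and the volume name `site-data` are hypothetical placeholders chosen for illustration.

```dockerfile
# Sketch: declaring a port and a data location in an image.
FROM nginx:1.27

# EXPOSE only documents that the service listens on port 80.
# Nothing is reachable from outside until the port is published with -p.
EXPOSE 80

# VOLUME marks this path as data that lives outside the container's
# writable layer, so it can outlive any single container.
VOLUME /usr/share/nginx/html

# Hypothetical usage (names are placeholders):
#   docker build -t example-site .
#   docker run -d --name site -p 8080:80 \
#     -v site-data:/usr/share/nginx/html example-site
```

Here `-p 8080:80` publishes container port 80 on host port 8080, and `-v site-data:...` attaches a named volume, so removing and recreating the `site` container leaves the served content in place.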

A common question from those accustomed to VMs is “How do I run multiple applications in one container?” The best practice, aligning with the KISS principle, is to run one process per container. This approach simplifies scaling, troubleshooting, and resource management. If multiple related services are needed, tools like Docker Compose can manage them as a single, multi-container application unit.

Beyond the Basics: Monoliths, Microservices, and Orchestration

While often associated with microservices, Docker’s versatility extends to various architectural patterns. It’s a common misconception that Docker is only for microservices. In reality, it can effectively containerize traditional monolithic applications, providing them with the benefits of isolation and portability. Many organizations leverage Docker to “lift and shift” legacy monolithic applications, gaining immediate advantages in deployment consistency and paving the way for gradual refactoring into microservices over time. This portability is crucial for embracing hybrid cloud strategies, allowing applications to migrate between different infrastructures without re-platforming.

For microservices, Docker shines by allowing each service to be packaged into its own isolated container. Tools like Docker Compose facilitate the definition and deployment of these multi-container, distributed applications as a single logical unit in development and staging environments.

As the number of containers grows across multiple hosts, container orchestration becomes essential. Docker itself (as a platform for building, shipping, and running containers) doesn’t inherently handle large-scale orchestration. This is where tools like Kubernetes (and others like AWS ECS or Azure ACI) come into play. An orchestrator manages and schedules the running of containers across a cluster of nodes, ensuring high availability, scaling, and automated deployment.

Finally, the source of your base images is crucial. Docker image registries like Docker Hub, Google Cloud Container Registry, AWS Elastic Container Registry, and Red Hat Quay host countless images. It is highly recommended to use officially verified base images from these registries to ensure security, stability, and consistent updates.

Conclusion

The question of specifying the OS for every Docker image is a gateway to understanding the profound paradigm shift introduced by containerization. As we’ve explored, Docker containers do not bundle a full operating system; instead, they share the host’s kernel, packaging only the application and its direct dependencies. This fundamental difference enables their lightweight nature, rapid startup times, and unparalleled portability.

Choosing the right base image, understanding the nuances of C libraries, considering lifecycle support, and adhering to Dockerfile best practices are all critical steps in building robust, secure, and efficient containerized applications. While a minimal OS context is implicitly chosen with your base image (e.g., FROM ubuntu), you are not installing a new, separate OS with each Docker image.

By mastering these concepts, developers and IT administrators can fully harness Docker’s power to build agile, portable, and controlled application environments, driving innovation and efficiency across their organizations.