Do Generative AI Images Have Watermarks? The Evolving Battle for Authenticity in Digital Art

In an increasingly visual world, the origin of every image matters. From the wallpapers and aesthetic backgrounds users seek on platforms like Tophinhanhdep.com to high-resolution stock photos and intricate digital art, the source and authenticity of visual content are paramount. Generative Artificial Intelligence (AI) has revolutionized image creation, enabling the rapid production of diverse visual content, from breathtaking nature scenes to abstract compositions and photorealistic imagery. However, this transformative power also raises challenges around originality, copyright, and misinformation. A central question for creators, consumers, and platforms alike is: do generative AI tools embed watermarks in the images they produce, and what do those watermarks actually signify?

The discussion around AI image watermarks is multi-faceted, involving efforts by tech giants to identify AI-generated content, artists’ innovative countermeasures, and the ongoing struggle against digital deception. Tophinhanhdep.com, a hub for high-quality images and image tools, stands at the intersection of these developments, continuously striving to provide users with reliable resources and the means to understand the visual content they encounter and create. This article delves deep into the mechanisms, intentions, and effectiveness of AI watermarking, exploring the crucial role it plays in shaping the future of visual design and digital photography.

The Dual Purpose of AI Watermarks: Identification and Protection

Watermarking in AI-generated images serves a dual purpose: it identifies content created by algorithms and, paradoxically, can be repurposed by human artists as a defense mechanism. Major AI image generators, reportedly including Midjourney, Stable Diffusion, DALL-E 2, and WOMBO, embed invisible watermarks into the images they produce. These watermarks are not visible to the human eye, but software can detect them, including the bots that crawl the internet for images. A key internal purpose of these invisible tags is to let AI systems filter out their own creations, so that those images are not inadvertently fed back into training datasets, creating a feedback loop in which AI-generated content learns from itself. This self-identification helps preserve the integrity and diversity of future AI models.

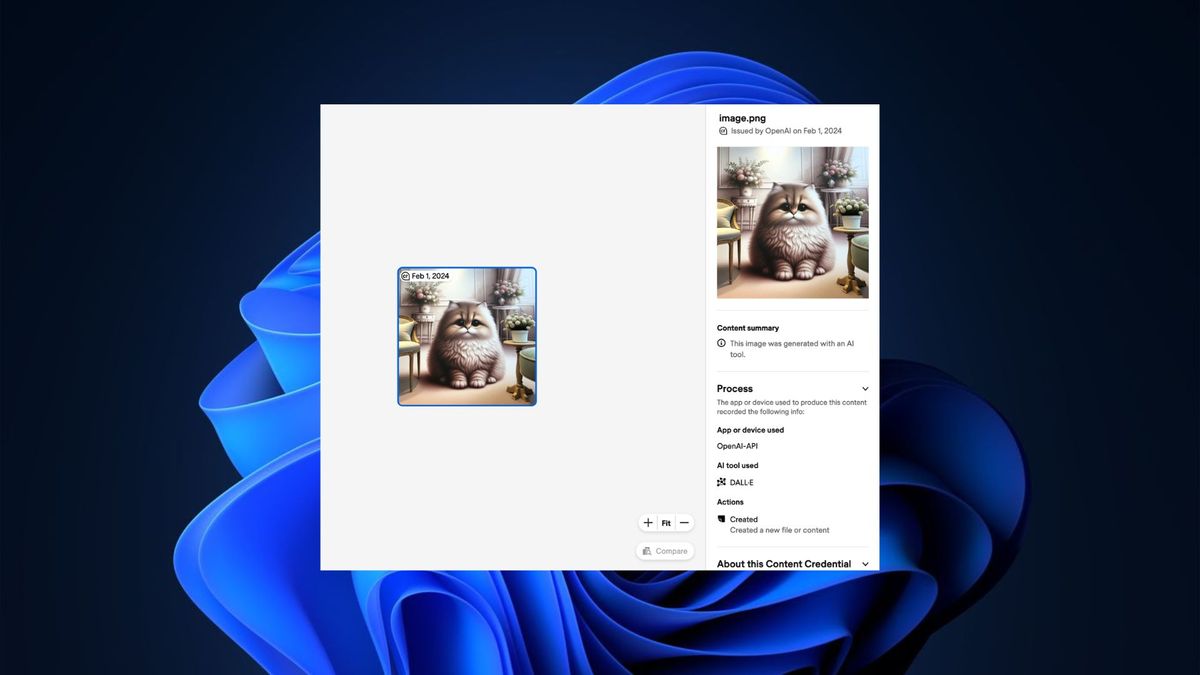

Microsoft’s Initiative and the C2PA Standard

Tech giants like Microsoft have taken proactive steps in this evolving landscape. Microsoft announced that it would cryptographically sign all AI images generated by its Bing Image Creator and Microsoft Designer tools with a hidden watermark. The initiative is part of a broader industry effort led by the Coalition for Content Provenance and Authenticity (C2PA). The C2PA, founded in 2021, is developing an open standard for indicating the origin and authenticity of digital images. Microsoft, a founding member, aims to embed this standard in the metadata of its AI-generated images so that their origin is clearly disclosed. Bing Image Creator already added a small "b" logo, but the C2PA standard goes further by providing a more robust and widely recognizable record of provenance. Moves like this by major players signal a growing acknowledgment that AI-generated content needs transparency, with consequences for the entire digital ecosystem, including how images are categorized and used on platforms like Tophinhanhdep.com, which offers images from many different sources.
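
To make the idea of a cryptographically signed provenance record more concrete, here is a minimal Python sketch built on a simplified manifest format invented for illustration. It binds a claim to the exact image bytes with a SHA-256 hash and then signs that claim. Real C2PA manifests use certificate-based public-key signatures and a standardized container; the shared-secret HMAC, the key `SIGNING_KEY`, and the functions `make_manifest` and `verify_manifest` are hypothetical stand-ins that keep the example within Python's standard library.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret. Real C2PA signers use X.509 certificates and
# public-key signatures; HMAC is a stdlib-only stand-in for this sketch.
SIGNING_KEY = b"demo-key-not-for-production"


def _sign(claim: dict) -> str:
    """Sign a canonical JSON encoding of the claim."""
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def make_manifest(image_bytes: bytes, generator: str) -> dict:
    """Build a toy provenance manifest bound to these exact image bytes."""
    claim = {
        "generator": generator,  # e.g. the AI tool that produced the image
        "content_sha256": hashlib.sha256(image_bytes).hexdigest(),
    }
    return {"claim": claim, "signature": _sign(claim)}


def verify_manifest(image_bytes: bytes, manifest: dict) -> bool:
    """Check the signature first, then check the claim matches the bytes."""
    if not hmac.compare_digest(_sign(manifest["claim"]), manifest["signature"]):
        return False  # the claim was forged or edited after signing
    actual = hashlib.sha256(image_bytes).hexdigest()
    return manifest["claim"]["content_sha256"] == actual


image = b"\x89PNG...pretend image bytes..."
manifest = make_manifest(image, "ExampleImageGenerator")
print(verify_manifest(image, manifest))            # True
print(verify_manifest(image + b"edit", manifest))  # False: content changed
```

Because the signed claim includes the content hash, editing the pixels or copying the manifest onto a different file makes verification fail, which is one reason signed provenance is harder to transplant than a bare tag.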

Artists’ Fight Back: The NoAI App

In a compelling turn, artists have begun to use AI's own tools against it. Concept artist Edit Ballai and colleagues from the industry developed the "NoAI" app, a countermeasure designed to protect human-made art from being exploited by AI training models. Ballai reasoned that if AI generators use invisible watermarks to identify their own creations, artists could use the same mechanism to safeguard their original work. The NoAI app embeds an AI-style watermark into human-created art using an open-source Python library, the same technology Stable Diffusion uses for its internal watermarking. The intent is to trick internet scraping bots into classifying the watermarked human artwork as AI-generated, making it "tasteless" to algorithms hunting for new training data.
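
The decoy logic can be sketched in a few lines of Python. This is a toy least-significant-bit (LSB) scheme, not the transform-domain methods offered by the invisible-watermark library, and the tag value `TAG` and the scraper function `filter_training_set` are hypothetical. The point is only the reasoning: if a scraper discards anything carrying a known tag, copying that tag onto human art keeps the art out of the training set while changing each pixel by at most one intensity level.

```python
TAG = b"AI"  # hypothetical marker; not the payload any real generator uses


def tag_bits(tag: bytes = TAG) -> list[int]:
    """Expand the tag into a flat list of bits, most significant bit first."""
    return [(byte >> (7 - i)) & 1 for byte in tag for i in range(8)]


def add_decoy_tag(pixels: list[int], tag: bytes = TAG) -> list[int]:
    """Write the tag into the lowest bit of the first pixels (toy LSB scheme)."""
    bits = tag_bits(tag)
    if len(bits) > len(pixels):
        raise ValueError("image too small to carry the tag")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # clear the lowest bit, then set it
    return marked


def looks_ai_generated(pixels: list[int], tag: bytes = TAG) -> bool:
    """Scraper-side check: do the leading pixels carry the tag?"""
    bits = tag_bits(tag)
    return [p & 1 for p in pixels[: len(bits)]] == bits


def filter_training_set(images: list[list[int]]) -> list[list[int]]:
    """Hypothetical scraper filter: keep only images without the tag."""
    return [img for img in images if not looks_ai_generated(img)]


human_art = [(7 * i) % 256 for i in range(64)]  # stand-in grayscale pixels
protected = add_decoy_tag(human_art)

print(filter_training_set([human_art, protected]) == [human_art])  # True
```

The protected copy is dropped by the filter even though it is human-made, which is exactly the misclassification the NoAI approach relies on.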

This approach highlights the ongoing tension between human creativity and algorithmic reproduction. Artists, whose work often serves as foundational training data for AI models, are seeking an ethical coexistence, advocating for regulations that require AI/ML models to train only on public domain content or legally purchased stock. They also call for the urgent removal of artists' work from current datasets and a shift toward "opt-in" programs that ensure fair compensation and true data removal if licensing contracts are breached. For platforms like Tophinhanhdep.com, which curates vast collections of images ranging from aesthetic backgrounds to beautiful photography, understanding and supporting such provenance initiatives is crucial for maintaining a fair and ethical visual content environment.

Visible vs. Invisible Watermarks: A Technical Overview

Understanding AI watermarking requires distinguishing between visible and invisible methods, each with its own advantages and vulnerabilities. Both types are employed to establish the origin and authenticity of digital media, yet their effectiveness varies significantly.

Visible Labels and Their Limitations

Visible watermarks are usually small logos or symbols superimposed directly onto an image, giving an immediate visual cue about its origin. Microsoft's Bing Image Creator, for instance, has included a tiny "b" logo in the corner of its generated images, and Adobe Firefly, a leading AI image generator, attaches a small "Content Credentials" symbol to the images users download. The C2PA specification also suggests such visible indicators, displayed over or next to the generated image, to clearly inform consumers.

However, visible labels, while straightforward, are easy to circumvent. As Meta CEO Mark Zuckerberg's AI-generated llama cover photo on Facebook demonstrated, visible labels can be cropped out, hidden by platform interfaces, or removed with rudimentary image editing. Experts such as Sophie Toura of Control AI note that it "takes about two seconds to remove that sort of watermark," underscoring how fragile these labels are against malicious intent or even unintentional actions like screenshotting. This ease of removal poses a significant challenge for platforms that aim to curate authentic visual content for categories like graphic design or high-resolution photography. Tophinhanhdep.com, for example, focuses on providing pristine images, and when users crop, resize, or compress images with Tophinhanhdep.com's image tools, such visible markers could be inadvertently obscured or removed if the process is not carefully managed.
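
Why a corner logo is so fragile is easy to see in a toy model. The Python sketch below uses made-up helper names and a tiny 8x8 grayscale grid rather than a real image library: it stamps a label into the bottom-right corner and then crops the image the way a careless crop or partial screenshot might.

```python
def stamp_corner_label(image: list[list[int]], size: int = 2, value: int = 255) -> list[list[int]]:
    """Paint a size x size 'logo' into the bottom-right corner of a copy."""
    stamped = [row[:] for row in image]
    height, width = len(image), len(image[0])
    for r in range(height - size, height):
        for c in range(width - size, width):
            stamped[r][c] = value
    return stamped


def crop_top_left(image: list[list[int]], rows: int, cols: int) -> list[list[int]]:
    """Keep only the top-left region, as a crop or partial screenshot would."""
    return [row[:cols] for row in image[:rows]]


blank = [[0] * 8 for _ in range(8)]
labelled = stamp_corner_label(blank)
cropped = crop_top_left(labelled, 6, 6)

print(any(255 in row for row in labelled))  # True: the label is present
print(any(255 in row for row in cropped))   # False: the crop removed it
```

Nothing in the remaining pixels records that a label was ever there, which is the core weakness of purely visible marks.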

Invisible Tags and Metadata: The Hidden Layer

Invisible watermarks represent a more sophisticated approach, embedding information directly into the image file in ways imperceptible to the human eye. These can take several forms:

- Metadata: This involves storing origin information within the image’s data structure, which can be viewed with specialized tools. Microsoft’s commitment to the C2PA standard, for example, means disclosing AI image origin in the image’s metadata.

- Microscopic Pixels: Some watermarking technologies embed patterns at the pixel level that are statistically detectable but visually imperceptible. Edit Ballai's "NoAI" app, which relies on the same "Invisible Watermark" Python library used by Stable Diffusion, works on this principle: the watermark makes small, systematic changes to pixel values that together carry the hidden information.

- Inaudible Watermarks (for audio AI): The challenge isn’t limited to images. AI audio generators, such as ElevenLabs, embed inaudible watermarks into their sound files. While undetectable to the human ear, these can be scanned by a “speech classifier” to verify AI generation.
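
The pixel-level idea, and its fragility, can be illustrated with the simplest possible scheme: hiding one bit in the least significant bit of each pixel. This Python sketch is an illustrative assumption, not how Stable Diffusion or the NoAI app encode their marks (the library they use offers sturdier transform-domain methods), and `requantize` is only a crude, hypothetical stand-in for lossy compression or real-world distortion.

```python
def embed_bits(pixels: list[int], bits: list[int]) -> list[int]:
    """Hide one bit in the least significant bit of each leading pixel."""
    out = list(pixels)
    for i, bit in enumerate(bits):
        out[i] = (out[i] & ~1) | bit
    return out


def extract_bits(pixels: list[int], n: int) -> list[int]:
    """Read the hidden bits back from the first n pixels."""
    return [p & 1 for p in pixels[:n]]


def requantize(pixels: list[int], step: int = 4) -> list[int]:
    """Crude stand-in for lossy compression: snap values to a coarser grid."""
    return [(p // step) * step for p in pixels]


payload = [1, 0, 1, 1, 0, 0, 1, 0]
original = [120, 121, 119, 130, 131, 128, 127, 126, 90, 91]
marked = embed_bits(original, payload)

print(max(abs(a - b) for a, b in zip(original, marked)))  # 1: imperceptible
print(extract_bits(marked, 8) == payload)                 # True
print(extract_bits(requantize(marked), 8) == payload)     # False: mark destroyed
```

A change of at most one intensity level per pixel is invisible to the eye, yet one round of coarse quantization wipes the payload; this is one reason robust schemes typically spread the mark across transform coefficients so it survives compression and mild distortion better.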

The strength of invisible watermarks lies in their stealth, making them harder to detect and remove casually. However, they are not infallible. Uploading images to social media platforms or converting file formats can often strip away metadata, inadvertently erasing the provenance information. Furthermore, a senior technologist for the Electronic Frontier Foundation points out that even the most robust invisible watermarks can be removed by someone with the skill and desire to manipulate the file itself. In the case of AI audio, real-world distortion, such as recording a deepfake robocall from a voicemail, can render these watermarks undetectable, as demonstrated by the diminished detection probability of an ElevenLabs watermark after audio degradation.

The complexity of visible and invisible watermarking underscores the dynamic nature of digital authenticity. As Tophinhanhdep.com offers a range of images and image tools, from converters to AI upscalers, understanding these underlying technologies is crucial for both content providers and consumers to navigate the intricate landscape of visual media provenance.

The Imperfect Shield: Challenges and Limitations of Watermarking

Despite the earnest intentions and technological sophistication behind AI watermarking, its current implementation presents significant challenges and limitations, raising questions about its effectiveness as a definitive solution against misinformation and copyright infringement. NBC News’ review of AI misinformation concluded that while watermarking is “floated by Big Tech as one of the most promising methods,” the results “don’t seem promising” so far, primarily because the technology is in its infancy and easily bypassed.

Ease of Bypass and Unintended Removal

The most glaring limitation of watermarking is its vulnerability to simple manipulation. As previously noted, visible labels can be removed by basic actions like cropping or screenshotting. The infamous example of Mark Zuckerberg’s AI-generated Facebook cover photo, where the visible label was cropped out by the platform’s display, highlighted this flaw. Even invisible watermarks, particularly those embedded in metadata, are not immune. The act of uploading images to various online platforms, or using image tools (such as certain compressors or optimizers not specifically designed for C2PA) to prepare content for sharing, can inadvertently strip away this crucial metadata, severing the link to the image’s AI origin. This makes it difficult for users viewing high-resolution images or digital art on Tophinhanhdep.com to verify their authenticity if the original provenance data has been lost in transit.
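
The metadata-stripping problem can be reproduced with nothing but Python's standard library. The sketch builds a valid 1x1 PNG whose `tEXt` chunk stands in for provenance metadata (real C2PA data uses its own container, not `tEXt`), then passes it through a hypothetical re-encoder that, like some optimizers, keeps only the chunks the PNG format marks as critical (those whose type begins with an uppercase letter). The pixels survive untouched; the provenance record does not.

```python
import struct
import zlib
from collections.abc import Iterator

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def chunk(kind: bytes, data: bytes) -> bytes:
    """Serialize one PNG chunk: length, type, data, then a CRC over type + data."""
    crc = zlib.crc32(kind + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + kind + data + struct.pack(">I", crc)


def tiny_png(text_key: bytes, text_value: bytes) -> bytes:
    """Build a 1x1 grayscale PNG that carries one tEXt metadata chunk."""
    ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)  # 1x1, 8-bit grayscale
    idat = zlib.compress(b"\x00\x80")  # filter byte 0, then one gray pixel
    return (PNG_SIGNATURE + chunk(b"IHDR", ihdr)
            + chunk(b"tEXt", text_key + b"\x00" + text_value)
            + chunk(b"IDAT", idat) + chunk(b"IEND", b""))


def iter_chunks(png: bytes) -> Iterator[tuple[bytes, bytes]]:
    """Yield (type, data) for every chunk after the 8-byte signature."""
    pos = len(PNG_SIGNATURE)
    while pos < len(png):
        (length,) = struct.unpack(">I", png[pos:pos + 4])
        yield png[pos + 4:pos + 8], png[pos + 8:pos + 8 + length]
        pos += 12 + length  # 4 length + 4 type + data + 4 CRC


def reencode_critical_only(png: bytes) -> bytes:
    """Hypothetical optimizer: rewrite the file keeping only critical chunks."""
    kept = [(k, d) for k, d in iter_chunks(png) if k[0:1].isupper()]
    return PNG_SIGNATURE + b"".join(chunk(k, d) for k, d in kept)


original = tiny_png(b"Provenance", b"Generated by an AI image tool")
stripped = reencode_critical_only(original)
print([k for k, _ in iter_chunks(original)])  # [b'IHDR', b'tEXt', b'IDAT', b'IEND']
print([k for k, _ in iter_chunks(stripped)])  # [b'IHDR', b'IDAT', b'IEND']
```

The stripped file still decodes to the same image, so nothing visible warns the viewer that its origin information has been lost.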

Moreover, skilled manipulators have the expertise and tools to deliberately remove even robust invisible watermarks. They may not only hide an image's AI origin but also replicate watermarks onto other files, producing false positives in which genuine, human-created media is wrongly attributed to AI. This further complicates the task of separating truth from fabrication, particularly for visual design content and photography.

The Unregulated AI Landscape and the Deepfake Threat

Another critical challenge stems from the sheer scale and decentralization of AI development. While major players like Meta, Google, OpenAI, Microsoft, and Adobe have committed to cooperative watermarking standards like C2PA, the digital ecosystem is teeming with thousands of other AI models available for download and use on app stores and platforms like GitHub. These unregulated tools operate outside any mandated watermarking standards, creating a vast loophole through which unidentifiable AI-generated content can proliferate. This unchecked proliferation exacerbates the problem of AI art as disinformation.

The rise of deepfakes—misleading images, videos, and audio generated or edited with AI—underscores the urgency of effective provenance tools. Deepfakes are increasingly used for malicious purposes, ranging from the non-consensual creation of sexually explicit images targeting women and girls, to sophisticated scams and political disinformation campaigns. The 2024 elections have already seen incidents like deepfake robocalls imitating public figures to spread misinformation. The ability of such content to spread virally, often stripped of any identifying watermarks, poses a severe threat to public trust and democratic processes. For a platform like Tophinhanhdep.com, dedicated to providing inspiring and aesthetic images, the integrity of its collections hinges on the ability to identify and differentiate between authentic, human-created content and potentially misleading AI-generated visuals.

The Path Forward: Ethical AI and User Responsibility

Given the complexities and limitations of current watermarking technologies, the path forward necessitates a multi-pronged approach that combines ethical AI development, robust industry standards, legislative action, and heightened user responsibility. This collective effort is crucial for fostering a digital environment where visual content, including the diverse range found on Tophinhanhdep.com, can be trusted and attributed correctly.

Demands for Ethical AI Development

Artists, who often bear the brunt of AI’s data scraping practices, are vocal advocates for more ethical AI models. Edit Ballai and her peers propose concrete recommendations:

- Public Domain and Legally Licensed Content: AI/ML models specializing in visual works should exclusively train on public domain content or legally purchased photo stock sets. This may require existing companies to retrain or even rebuild their models.

- Algorithmic Disgorgement: Artists demand the urgent removal of their work from current AI datasets and latent spaces.

- Opt-in and Compensation: An “opt-in” model should become standard, where artists are compensated (upfront sums and royalties) every time their work is utilized for AI generation or training.

- True Data Removal: AI companies must offer verifiable mechanisms for true data removal, especially if licensing agreements are breached.

These demands highlight a fundamental tension between technological progress and artistic rights. Addressing these concerns is vital not only for artists but for the entire creative economy and for ensuring that platforms offering digital art and photography, such as Tophinhanhdep.com, operate on a foundation of respect for intellectual property.

Strengthening Industry Standards and Collaboration

The efforts of organizations like the C2PA are critical for establishing uniform standards across the industry. The commitment of tech giants such as Microsoft, Adobe, Meta, Google, and OpenAI, alongside camera makers like Leica and Nikon that are building C2PA support directly into their devices, represents a significant step. These standards aim to provide consistent ways to indicate content provenance, whether through metadata or visible labels. However, as noted above, implementation needs to be more robust, and enforcement across platforms and tools must become stricter. The goal is a future where "content credentials" are a recognized and reliable indicator of authenticity, much like the trust signals people rely on to spot phishing websites.

Tophinhanhdep.com, with its array of image tools (converters, compressors, optimizers, AI upscalers, image-to-text) and focus on visual design resources, can play a role in promoting awareness and potentially integrating such standards. For instance, when users utilize AI upscalers or editing styles, the platform could encourage the preservation or clear labeling of content provenance.

The Imperative of Public Education and Media Literacy

Ultimately, even the most sophisticated watermarking technology and stringent regulations will fall short if the public is not equipped to understand and interpret them. Adobe’s general counsel, Dana Rao, emphasizes the necessity of educating the public to recognize content credentials and to practice healthy skepticism towards visual media. In an era saturated with images and information, fostering media literacy—the ability to critically analyze and evaluate content—is paramount. Users must learn to verify visual media before accepting it as truth, especially for “important things” like news, political content, or personal representations.

For platforms like Tophinhanhdep.com, this translates into a responsibility beyond simply hosting images. It means providing resources, potentially in the form of guides or informational articles, that empower users to understand AI watermarks, identify deepfakes, and critically assess the images they encounter. By offering high-quality, curated collections of wallpapers, backgrounds, aesthetic images, nature photography, and more, alongside tools for managing these assets, Tophinhanhdep.com can contribute to a more informed and discerning digital community. The future of visual design, digital photography, and image inspiration relies not just on technological safeguards, but on the collective commitment to authenticity and transparency in the digital age.