Cloaking Your Creativity: A Guide to Protecting Images with Glaze and Nightshade on Tophinhanhdep.com

In the dynamic world of digital imagery, where stunning wallpapers, high-resolution photography, and intricate digital art define our visual experiences, the rise of artificial intelligence (AI) presents both unprecedented opportunities and significant challenges. Generative AI models, capable of producing remarkably realistic and aesthetically pleasing images, have rapidly become central to discussions within the creative community. While these tools offer new avenues for exploration and efficiency in visual design, they also stir profound concerns among artists and photographers regarding intellectual property, unauthorized usage, and the mimicry of unique artistic styles.

The core issue lies in how these powerful AI models are trained. They learn by ingesting vast quantities of digital images—billions, in fact—often scraped from public platforms across the internet. This practice frequently occurs without the explicit consent, knowledge, or compensation of the original creators. For artists who dedicate years to perfecting their craft and cultivating a distinctive visual language, the idea of an algorithm replicating or deriving from their unique style without attribution or remuneration can feel like a direct assault on their livelihood and creative integrity. This dilemma sparks critical questions about copyright, ownership, and the future value of human-created art in an increasingly automated landscape.

Fortunately, innovative solutions are emerging from the intersection of technology and artistic protection. Tools like Glaze and Nightshade, developed by a pioneering team at the University of Chicago, offer artists a proactive defense against the unauthorized exploitation of their digital artwork by AI. These applications represent a new frontier in digital security, designed to introduce subtle yet critical modifications to images, effectively “cloaking” them from AI’s learning mechanisms. For the vibrant community of creators on Tophinhanhdep.com, specializing in everything from “Beautiful Photography” to “Abstract Wallpapers” and “Digital Art,” understanding and utilizing these protective measures is becoming an indispensable part of safeguarding their visual assets in the era of AI. This article delves into the mechanics of Glaze and Nightshade, illustrating how these tools empower creators to reclaim control over their digital masterpieces.

The Evolving Landscape of Digital Art and AI

The “invasion of the AI bots” is not a futuristic blockbuster plot, but a present-day reality profoundly impacting creative fields. Generative AI models leverage colossal datasets, often compiled by indiscriminately scraping images from popular online platforms like social media, stock photo repositories, and personal art portfolios. This unconsented data acquisition fuels AI’s ability to learn, adapt, and ultimately generate new images that can mimic specific styles, content, or even composite elements from existing artworks.

This process highlights a critical legal and ethical chasm: original works created by humans are generally protected by copyright, subject to limited exceptions such as fair use, whereas purely machine-generated output currently receives no such protection in many jurisdictions, including the United States. This discrepancy creates a vulnerable space for artists. If an AI model is trained on an artist’s body of work and subsequently generates near-identical or stylistically similar outputs, the artist’s copyrighted material is effectively reproduced by a system whose own output may not be protected at all. Can artists demand royalties? Can they forbid their work from being used for training? These questions are still working their way through the courts, but in the interim, countless artists find their work being copied or mimicked without recourse.

The vulnerability extends beyond famous artists; fine-tuning image generation models to reproduce the style of any artist with a substantial online presence is becoming increasingly trivial. This places immense pressure on digital artists, photographers, and visual designers who rely on online platforms—the very places AI models scrape—to showcase their work. For creators on Tophinhanhdep.com, whose contributions span “High Resolution Photography,” “Aesthetic Backgrounds,” and intricate “Graphic Design,” protecting their unique visual identity is paramount.

This is where Glaze and Nightshade emerge as “Protective Optimization Technologies” (POTs) or “subversive AI.” As discussed in various articles on Tophinhanhdep.com’s blog, these tools are designed to counteract the unintended harms caused by AI systems optimizing for data acquisition and style replication. By allowing artists to add subtle, near-imperceptible perturbations to their images before posting them online, Glaze and Nightshade directly interfere with the learning process of AI models. Unlike traditional adversarial attacks that might not survive the noise of camera capture or preprocessing, these methods are effective because artists control the digital versions of the images they upload. Because the perturbation is applied to the digital file itself, this approach to data poisoning gives creators a practical way to push some of the costs of AI back onto the systems that scrape their work, allowing them to safeguard their intellectual property before legal frameworks can fully catch up.

Glaze: The Style Cloak for Your Visual Identity

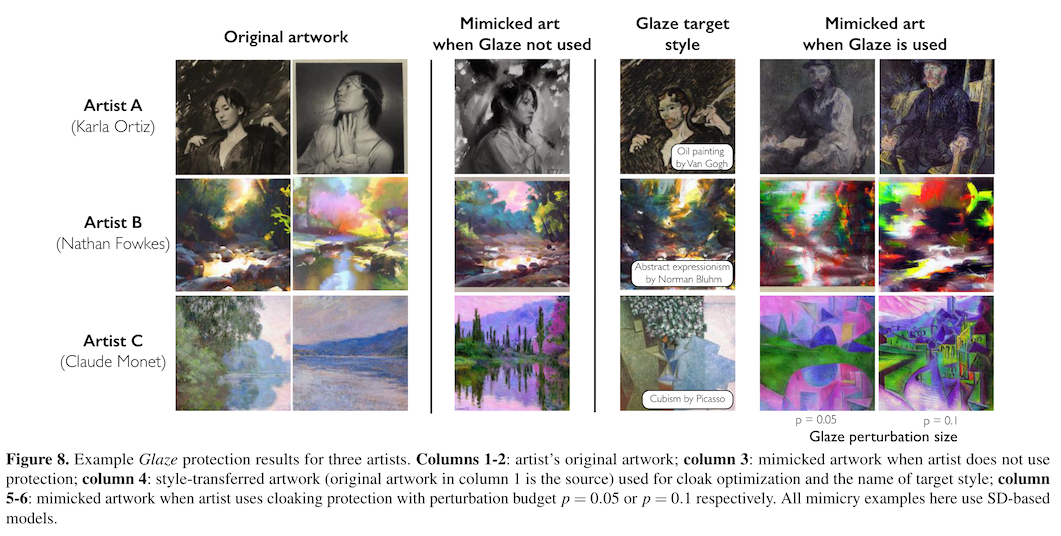

Glaze is a software tool designed to protect an artist’s unique style from being learned and mimicked by generative AI models. It acts as a digital “style cloak,” introducing near-imperceptible changes to the pixels of an image that confuse AI algorithms, causing them to misinterpret the artwork’s true style. For creators on Tophinhanhdep.com, where distinct visual identities are celebrated across categories like “Nature Photography” and “Digital Art,” Glaze offers a crucial layer of defense.

What is Glaze and How Does it Work?

At its core, Glaze manipulates individual pixels to alter how an AI model perceives the style of an artwork. While these changes are almost invisible to the human eye, an AI model attempting to train on the “glazed” image will interpret it as having a dramatically different style. For example, a photorealistic illustration might appear to an AI as a cubist painting, or a detailed landscape as an abstract composition. This deception prevents AI models from accurately learning and replicating the artist’s authentic style.
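The idea of a small, bounded pixel change that moves an image toward a different style in a model’s feature space can be illustrated with a toy sketch. This is not Glaze’s actual algorithm: the linear “feature extractor” `W`, the 16-pixel “images,” the budget `eps`, and the step size are all assumptions chosen only to make the mechanism visible.

```python
import random

def features(W, x):
    """Toy stand-in for a style feature extractor: a fixed linear map W @ x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b))

def cloak(x, target, W, eps=0.05, steps=200, lr=0.005):
    """Nudge image x so its features approach `target`, changing no pixel by more than eps.

    Projected gradient descent on ||W(x + d) - target||^2 over the perturbation d.
    """
    d = [0.0] * len(x)
    for _ in range(steps):
        shifted = [xi + di for xi, di in zip(x, d)]
        resid = [f - t for f, t in zip(features(W, shifted), target)]
        # Gradient of the squared error with respect to d is 2 * W^T @ resid.
        grad = [2.0 * sum(W[k][i] * resid[k] for k in range(len(W)))
                for i in range(len(x))]
        # Gradient step, then clip to the eps budget and to the pixel range [0, 1].
        d = [max(max(-eps, -xi), min(min(eps, 1.0 - xi), di - lr * gi))
             for xi, di, gi in zip(x, d, grad)]
    return [xi + di for xi, di in zip(x, d)]

rng = random.Random(0)
n_pixels, n_feats = 16, 4
W = [[rng.uniform(-1, 1) for _ in range(n_pixels)] for _ in range(n_feats)]
artwork = [rng.uniform(0.2, 0.8) for _ in range(n_pixels)]      # the artist's image
other_style = [rng.uniform(0.2, 0.8) for _ in range(n_pixels)]  # an image in another style
target = features(W, other_style)

cloaked = cloak(artwork, target, W)
before = sq_dist(features(W, artwork), target)
after = sq_dist(features(W, cloaked), target)
max_change = max(abs(c - a) for a, c in zip(artwork, cloaked))
print(f"feature distance to target style: {before:.3f} -> {after:.3f}")
print(f"largest per-pixel change: {max_change:.3f}")
```

In this sketch the cloaked image stays within `eps` of the original at every pixel, yet its features sit closer to the other style. Real style cloaking works against deep networks rather than a linear map, so treat this only as an intuition aid.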

Glaze offers several key features to enhance artwork security:

- Style Cloaks: Artists can apply various intensity levels of style cloaks, ensuring effective protection for diverse artworks, from smooth character designs to complex animated pieces.

- Render Quality: A render quality setting lets artists balance protection effectiveness against processing time.

- GPU Support: For efficiency, Glaze runs effectively on compatible GPUs, significantly reducing computation time while maintaining high render quality.

Glaze is ideal for a variety of use cases, as highlighted by resources available on Tophinhanhdep.com:

- Digital Artists: Whether creating character designs, animations, or detailed illustrations, Glaze ensures your unique style remains proprietary.

- Graphic Designers: Protect commercial and client work from AI-driven mimicry, maintaining the exclusivity and originality of your designs.

- Art Instructors and Students: Safeguard educational materials and student projects, fostering creativity while protecting intellectual property.

- Content Creators: Secure digital content for social media, blogs, or any online platform to prevent unauthorized use or imitation.

Pros of using Glaze:

- Protects Art Style: Generative AI models are effectively “tricked,” preventing them from accurately copying or training on your unique style.

- Near-Invisible Changes: The pixel manipulations are largely imperceptible to human viewers, ensuring the original aesthetic integrity of your artwork.

- Effective Against Multiple AI Models: A cloak applied once is designed to transfer across different AI models (e.g., Midjourney, Stable Diffusion), as detailed in guides available on Tophinhanhdep.com.

- Robust Against Removal: The cloaks are designed to resist common image transformations like sharpening, blurring, denoising, compression, or metadata stripping, making it difficult for AI models to bypass the protection.

Cons and Considerations:

- Visibility on Simple Surfaces: While subtle, Glaze can sometimes be slightly noticeable on artwork with simple gradients or flat colors, especially in vector art. It tends to be less apparent on more detailed compositions.

- Time-Consuming Process: Applying Glaze can be very time-consuming, depending on the chosen intensity and render quality. Artists often need to integrate it into their routine for new artwork.

- Resource Intensive: The rendering process requires a capable computer, and it can slow down your system while running.

- Not a Permanent Solution: Glaze operates within an ongoing “arms race” between protective tools and AI countermeasures. While robust, no method is guaranteed to be foolproof or long-lasting against future algorithms. As noted in discussions on Tophinhanhdep.com’s articles, it’s a necessary first step rather than a panacea.

Practical Application and Accessibility on Tophinhanhdep.com

Using Glaze is straightforward for creators looking to protect their visual assets. The desktop application, available for download via resources linked on Tophinhanhdep.com, features a user-friendly interface. Users simply:

- Download and Run: Install the Glaze software on their desktop computer.

- Select Image: Choose the image file they wish to cloak.

- Set Parameters: Adjust preferred intensity and render quality settings.

- Specify Output: Select the folder for the glazed image to be saved.

- Run Glaze: Initiate the cloaking process.

For optimal results, Tophinhanhdep.com recommends running Glaze with PNG files of your artwork. Once the process is complete, convert the output PNG file to a JPEG for faster and lighter uploading to websites and social media platforms.
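The PNG-to-JPEG conversion step can be scripted. The following is a minimal sketch that assumes the third-party Pillow library is installed; the function names, the output naming, and the `quality=92` default are illustrative choices, not settings recommended by the Glaze team.

```python
from pathlib import Path

def jpeg_path_for(png_path: Path) -> Path:
    """Return the sibling .jpg path for a cloaked PNG (naming is illustrative)."""
    return png_path.with_suffix(".jpg")

def convert_to_jpeg(png_path: Path, quality: int = 92) -> Path:
    """Convert a cloaked PNG to JPEG; `quality` is an assumed starting point."""
    # Imported here so the path helper above works even without Pillow installed.
    from PIL import Image

    out_path = jpeg_path_for(png_path)
    with Image.open(png_path) as img:
        # JPEG has no alpha channel, so flatten to RGB before saving.
        # Transparent areas are not composited onto a background here.
        img.convert("RGB").save(out_path, "JPEG", quality=quality)
    return out_path
```

A call such as `convert_to_jpeg(Path("artwork_glazed.png"))` would write `artwork_glazed.jpg` next to the source file. If the artwork relies on transparency, composite it onto a background color before converting.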

For artists who are unable to download the desktop application or prefer a browser-based solution, WebGlaze is an alternative service. This free web service, accessible via information and links on Tophinhanhdep.com, provides a simple browser interface for applying Glaze. Users can upload images, specify protection strength, and receive the glazed output via email. Additionally, Tophinhanhdep.com’s community features may offer integrated tools or guidance for similar web-based protection services, aligning with our “Image Tools” and “Visual Design” categories.

Nightshade: Poisoning the AI Well for Content Protection

Nightshade takes a more aggressive, offensive stance in protecting digital art from AI exploitation. Instead of merely cloaking style, Nightshade “poisons” the data itself, designed to corrupt the learning process of generative AI models. This tool is likened to its namesake plant – a subtle poison that, over time, causes significant dysfunction. For the creative community on Tophinhanhdep.com, particularly those involved in “Digital Photography,” “Photo Manipulation,” and creating “Thematic Collections,” Nightshade represents a powerful deterrent against unauthorized data scraping.

The “Poison” Principle and its Impact

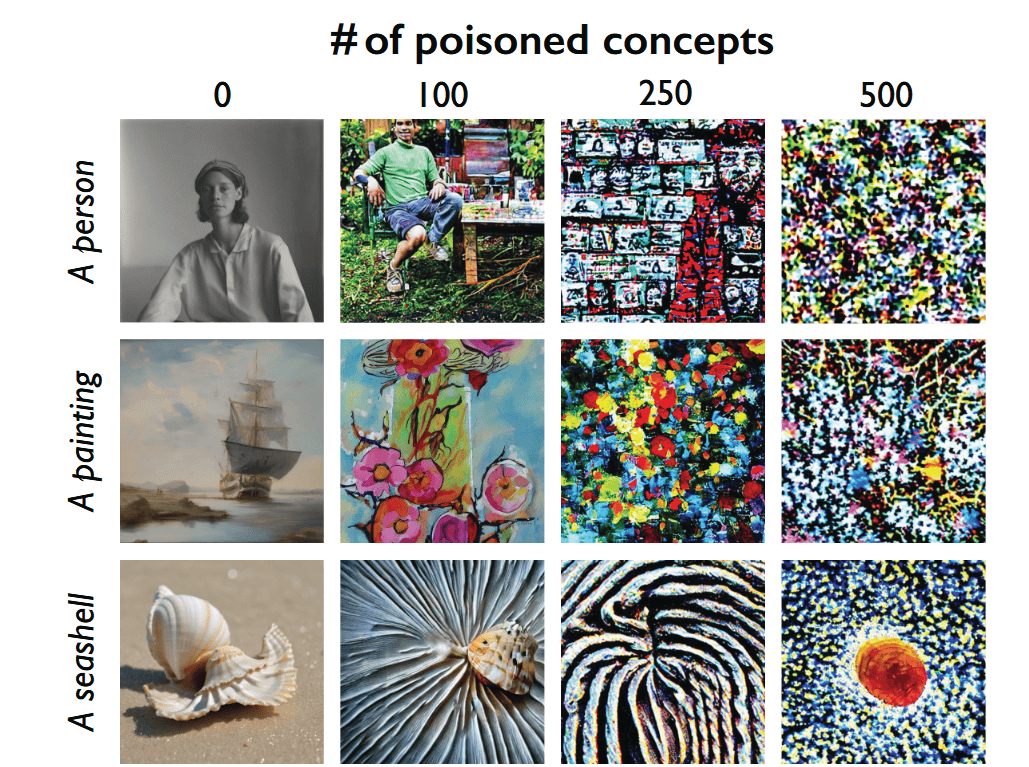

Nightshade works by making subtle pixel-level alterations that are nearly imperceptible to humans but cause AI models to misinterpret the content. It is a targeted form of “data poisoning” that shifts an image’s representation in the model’s feature space toward an entirely different concept. For instance, an image of a dog, once “shaded,” might appear as a cat to an AI model, or a flower might register internally as a car.

This technique, as explored in depth through articles and resources on Tophinhanhdep.com, aims to damage the learning models themselves. When enough of these poisoned images are included in a training dataset, the AI builds inaccurate associations between visual features and text prompts. The poisoned image is paired with its original (correct) label (e.g., “flower”), but its internal features align with something else (e.g., “car”). Consequently, when users later prompt the AI for a “flower,” the model, having learned from corrupted data, might generate distorted, nonsensical images that contain elements of a car.
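The effect of mislabeled features on a learned association can be sketched with a deliberately tiny model. Here the “model” simply averages the feature vectors it sees for each caption, which is an assumption made for illustration and nothing like how a diffusion model is actually trained; the two-dimensional features and sample counts are likewise hypothetical.

```python
# Hypothetical 2-D "image features": axis 0 = flower-like, axis 1 = car-like.
FLOWER = [1.0, 0.0]
CAR = [0.0, 1.0]

def train(pairs):
    """Toy 'model': remember the mean feature vector seen for each caption."""
    sums, counts = {}, {}
    for label, feat in pairs:
        acc = sums.setdefault(label, [0.0] * len(feat))
        for i, v in enumerate(feat):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def dataset(n_clean, n_poison):
    """Build (caption, features) pairs with a chosen number of poisoned samples."""
    clean = [("flower", FLOWER)] * n_clean
    # Poisoned samples keep the caption "flower" but carry car-like features.
    poison = [("flower", CAR)] * n_poison
    cars = [("car", CAR)] * n_clean
    return clean + poison + cars

for n_poison in (0, 8, 24):
    model = train(dataset(n_clean=8, n_poison=n_poison))
    print(n_poison, "poisoned samples -> learned 'flower' features:", model["flower"])
```

As the share of poisoned samples grows, what the toy model has learned for “flower” drifts toward the car-like axis, while its notion of “car” is untouched.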

A particularly impactful aspect of Nightshade is its “bleed-through” effect. Poisoning one specific concept (e.g., “dog”) can corrupt related semantic concepts (e.g., “puppy” or “golden retriever”) due to the semantic overlap in how AI maps language to images. The ultimate goal is to make the training data so unreliable and costly for AI companies that it becomes more economically viable for them to license “clean” data directly from creators, rather than risk ingesting corrupted information.
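The bleed-through idea can be illustrated with another toy sketch. It assumes that a prompt’s output is a similarity-weighted blend of what the model learned for nearby concepts; the text embeddings, the three-dimensional image features, and the blending rule are all hypothetical and stand in for far more complex behavior in real models.

```python
import math

# Hypothetical 2-D text embeddings: "puppy" sits close to "dog", "car" is unrelated.
TEXT = {"dog": [1.0, 0.0], "puppy": [0.9, 0.3], "car": [0.0, 1.0]}

def cosine(a, b):
    """Cosine similarity between two 2-D vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def generate(prompt, learned):
    """Blend the learned outputs of trained concepts, weighted by prompt similarity."""
    weights = {c: max(cosine(TEXT[prompt], TEXT[c]), 0.0) for c in learned}
    total = sum(weights.values())
    dims = len(next(iter(learned.values())))
    return [sum(weights[c] * learned[c][i] for c in learned) / total
            for i in range(dims)]

# Image features are [dog-like, car-like, cat-like].
clean = {"dog": [1.0, 0.0, 0.0], "car": [0.0, 1.0, 0.0]}
poisoned = {"dog": [0.0, 0.0, 1.0], "car": [0.0, 1.0, 0.0]}  # "dog" now maps to cat features

for prompt in ("puppy", "car"):
    cat_before = generate(prompt, clean)[2]
    cat_after = generate(prompt, poisoned)[2]
    print(f"{prompt}: cat-likeness {cat_before:.2f} -> {cat_after:.2f}")
```

Only “dog” was poisoned, yet the output for “puppy” picks up cat-like features because its embedding lies close to “dog”; the unrelated “car” prompt is unaffected.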

Pros of using Nightshade:

- Active Poisoning: Beyond mere protection, Nightshade actively confuses and damages generative AI models, leading to increasingly erroneous outputs over time.

- Content Protection: It targets specific concepts within images, making it difficult for AI to accurately learn and reproduce desired content.

- Economic Deterrent: By increasing the cost and risk of training on scraped data, Nightshade aims to force AI developers to rethink their data acquisition strategies and encourage fair compensation for artists.

- Scalable Impact: The more artists who use Nightshade, the greater the collective impact on AI models, potentially leading to systemic changes in data sourcing practices.

Cons and Considerations (similar to Glaze, but with unique challenges):

- More Noticeable Changes: Nightshade’s pixel alterations can be more discernible to the human eye compared to Glaze, especially on simple gradients or flat color fields. While less obvious when an image is viewed smaller online, artists themselves may still notice the subtle artifacts.

- Time-Consuming and Resource-Intensive: Similar to Glaze, applying Nightshade can be a lengthy process requiring significant computing power, especially for multiple images or high protection levels.

- Legal Risks: The “poisoning” aspect of Nightshade, which aims to cause widespread disruption to AI systems, carries potential legal risks under laws like the Computer Fraud and Abuse Act. While designed for artistic defense, the act of intentionally corrupting data could be viewed as causing damage to another’s computer system, as discussed in legal analyses available through Tophinhanhdep.com.

- Unintended Consequences: Intentionally corrupting training data, while beneficial for artists, could theoretically have broader negative impacts on the overall quality and reliability of AI models in other critical sectors like healthcare or finance, should the poisoned data inadvertently spread.

- Arms Race: Like Glaze, Nightshade operates within an ongoing technological arms race. AI developers are continuously working on countermeasures, such as improved data cleaning filters and anomaly detection tools, which could potentially diminish Nightshade’s effectiveness over time.

Using Nightshade for Robust Digital Asset Security on Tophinhanhdep.com

The operational process for Nightshade largely mirrors that of Glaze, emphasizing ease of use for artists. The desktop application, available via links and guides on Tophinhanhdep.com, allows users to:

- Download and Run: Install the Nightshade software.

- Select Image: Choose the image to be “shaded.”

- Set Parameters: Adjust intensity and render quality.

- Specify Output: Select the save location for the shaded image.

- Run Nightshade: Initiate the poisoning process.

Tophinhanhdep.com highly recommends using PNG files for Nightshade for the best results, followed by conversion to JPEG for efficient online uploading. For enhanced protection, especially for critical visual assets featured in “Creative Ideas” or “Trending Styles” on Tophinhanhdep.com, combining Nightshade and Glaze is an effective strategy: apply Nightshade to the PNG first, then Glaze to the Nightshaded PNG, and finally convert the result to JPEG. This multi-layered approach provides a robust defense against both style mimicry and content-based learning by AI.
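The ordering of the combined workflow can be written down as a small checklist helper. This sketch only plans file names for each stage; Nightshade and Glaze themselves are still run by hand in their desktop applications, and the `_shaded` and `_glazed` suffixes are hypothetical naming conventions, not names the tools produce.

```python
from pathlib import PurePosixPath

def plan(source_png: str) -> list[tuple[str, str]]:
    """List the stages in order, each with the file it is expected to produce."""
    src = PurePosixPath(source_png)
    shaded = src.with_name(f"{src.stem}_shaded.png")        # step 1: Nightshade on the PNG
    glazed = shaded.with_name(f"{shaded.stem}_glazed.png")  # step 2: Glaze on the shaded PNG
    final = glazed.with_suffix(".jpg")                      # step 3: convert to JPEG
    return [("nightshade", str(shaded)), ("glaze", str(glazed)), ("jpeg", str(final))]

for stage, output in plan("portfolio/fox.png"):
    print(f"{stage:<10} -> {output}")
```

Keeping the intermediate PNGs under distinct names makes it easier to confirm that each stage ran on the output of the previous one.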

The Broader Implications: An Ongoing Arms Race

The introduction of Glaze and Nightshade signifies a pivotal moment in the ongoing battle for intellectual property in the digital age. These tools are not merely software applications; they are manifestations of an “arms race” – a continuous cycle of attack and defense between creators and AI systems. As powerful as these protective measures are, it’s crucial to acknowledge that no method is foolproof or permanently invulnerable. AI developers are actively exploring countermeasures, including sophisticated filters to clean datasets and advanced anomaly detection algorithms designed to neutralize poisoned data.

This dynamic illustrates that the fundamental problem transcends technology; it’s a societal and legal challenge where technological advancements often outpace ethical guidelines and regulatory frameworks. The rapid evolution of AI means that what works today might be bypassed by a future algorithm, potentially rendering previously protected art vulnerable. Therefore, tools like Glaze and Nightshade, while offering immediate and substantial protection, serve as vital components in a larger, evolving strategy.

Beyond the immediate protection of artwork, the ethical implications of data poisoning warrant careful consideration. While currently aimed at defending artistic integrity, the techniques behind Nightshade could, in theory, be maliciously repurposed to corrupt datasets in critical domains such as healthcare, finance, or transportation. AI failures caused by poisoned data in these sectors could have severe real-world consequences, from misdiagnosing patients to misleading autonomous vehicles. This darker side underscores the need for ongoing vigilance and robust discussions about the responsible development and deployment of AI.

Despite these challenges, the proactive stance offered by Glaze and Nightshade empowers artists and creators to assert their ownership and influence the future direction of AI development. By collectively utilizing these tools, as encouraged by resources on Tophinhanhdep.com, creators can increase the computational and resource costs for AI companies that disregard copyright. This ongoing resistance, one poisoned pixel at a time, serves as a powerful reminder that the raw material of artificial intelligence ultimately stems from human labor – labor that is creative, emotional, and inherently human. For the Tophinhanhdep.com community, engaging with these tools means actively participating in shaping a future where digital creativity is respected, compensated, and securely protected.

Conclusion

In an era where the lines between human and machine creativity are increasingly blurred, Glaze and Nightshade emerge as essential tools for digital artists, photographers, and visual designers. As showcased across Tophinhanhdep.com’s diverse categories, from “Images (Wallpapers, Backgrounds, Aesthetic, Nature, Abstract, Sad/Emotional, Beautiful Photography)” to “Photography (High Resolution, Stock Photos, Digital Photography, Editing Styles)” and “Visual Design (Graphic Design, Digital Art, Photo Manipulation, Creative Ideas),” the value of original visual content is immense. These protective applications, developed by the University of Chicago team, offer tangible methods for safeguarding this value.

Glaze provides a sophisticated “style cloak,” subtly altering image pixels to confuse AI models attempting to mimic an artist’s unique visual language. It helps ensure that your individual creative signature, whether expressed in high-resolution photography or intricate digital art, remains distinct and protected. Nightshade, on the other hand, employs a more assertive “data poisoning” technique, introducing subtle changes that corrupt how AI models learn specific concepts from images. When many artists use it collectively, it aims to escalate the costs associated with training AI on unscrupulously scraped data, nudging AI developers towards ethical sourcing and fair licensing practices.

Given the dynamic nature of this technological “arms race” and the continuous evolution of both AI capabilities and protective measures, the long-term effectiveness of Glaze and Nightshade is not guaranteed, but their benefits today are clear. They offer creators a practical means to protect their intellectual property and contribute to a more equitable digital ecosystem. The ultimate goal, as highlighted in numerous articles and discussions on Tophinhanhdep.com, is to shift the economic landscape, making it more cost-effective for AI companies to directly license “safe” and consented images from creators.

We encourage all members of the Tophinhanhdep.com community, whether you’re uploading stunning “Mood Boards,” sharing “Thematic Collections,” or contributing to our “Image Inspiration” galleries, to explore and implement these vital tools. By actively glazing and shading your work, you not only protect your own creations but also contribute to a collective movement that champions artistic integrity and fair compensation in the age of AI. Tophinhanhdep.com remains committed to providing resources, guidance, and a platform that supports and empowers creators in safeguarding their visual assets and shaping the future of digital creativity.