Is It an AI-Generated Image? Unmasking the Artifice in a Hyper-Real Digital Age

In an era saturated with digital visuals, the question “Is it an AI-generated image?” has moved from niche curiosity to pervasive challenge. From wallpapers and aesthetic backgrounds to stock photos and intricate digital art, images are everywhere. Yet as AI image generators such as OpenAI’s DALL-E, Midjourney, and Stable Diffusion become more sophisticated and accessible, telling genuine photographs apart from synthetic creations has become a formidable task. These tools open new avenues for visual design and creative exploration, but they also raise serious concerns about authenticity, disinformation, and copyright. For platforms like Tophinhanhdep.com, dedicated to providing high-resolution images, beautiful photography, and useful image tools, understanding the nuances of AI-generated content is paramount.

The sheer volume of AI-generated images appearing on social media, websites, and other digital platforms, often without any label, makes this discussion urgent. Such images have already fueled numerous hoaxes, from fabricated arrests of public figures to absurd outfits for religious leaders, illustrating their potent capacity for deception. Many people believe they can spot an AI image instinctively, but research suggests otherwise. A study from the University of Waterloo, for instance, found that people struggle far more than expected to distinguish AI-generated faces from real ones. That difficulty points to a clear need for greater awareness and better detection strategies in an increasingly visual world.

The Shifting Landscape of Visual Authenticity

The rise of generative AI has fundamentally altered our relationship with visual media. What was once considered concrete evidence can now be easily manipulated or fabricated, eroding trust and challenging our perceptions of reality. This rapid evolution presents both incredible opportunities for creativity and significant threats to information integrity.

Why Distinguishing AI from Reality is Increasingly Difficult

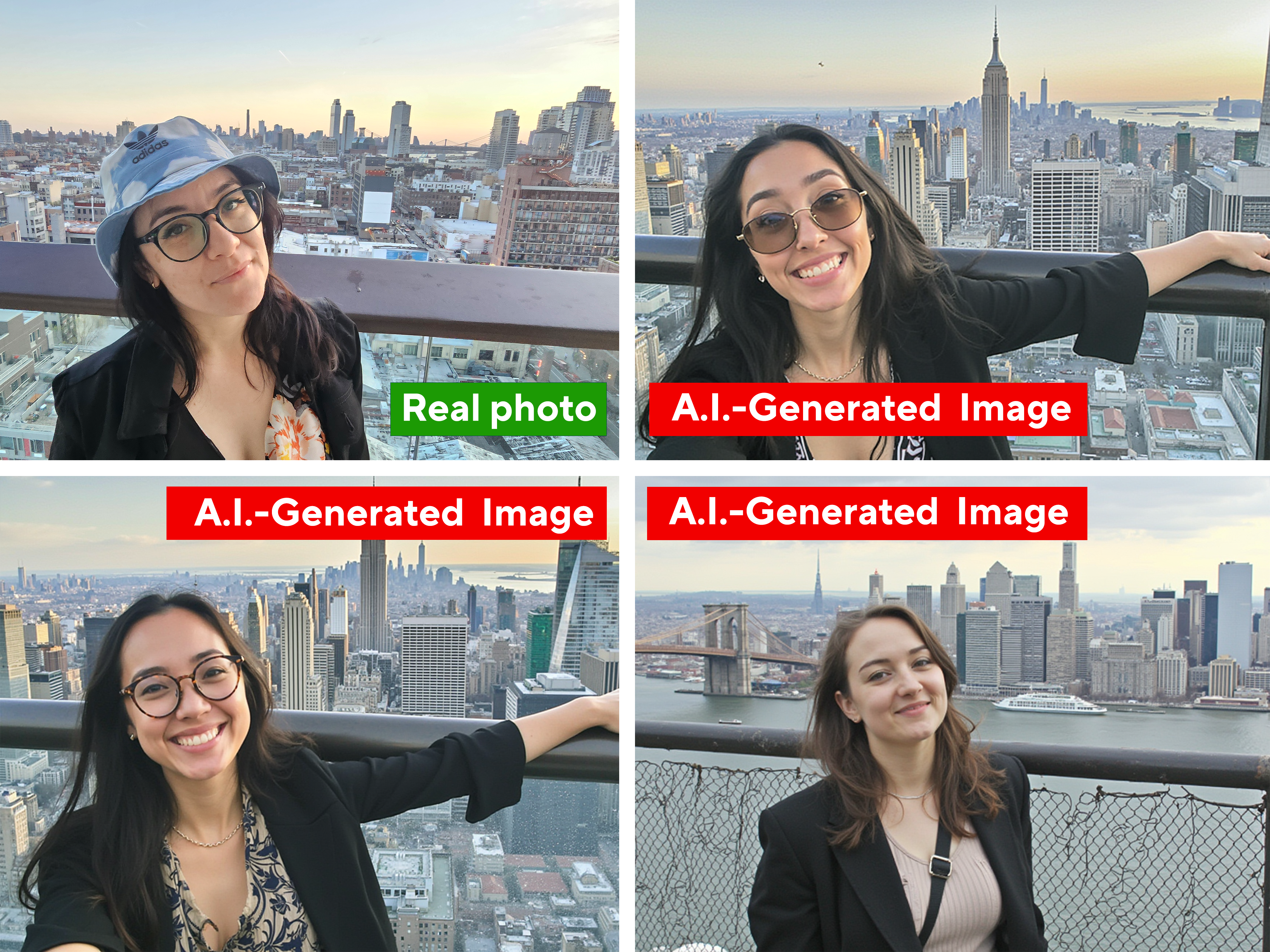

The University of Waterloo study, published in Advances in Computer Graphics, showed 260 participants 20 unlabeled pictures: half of real people sourced from Google, half generated by Stable Diffusion or DALL-E. The results were striking: only 61% of participants could accurately tell the AI-generated people from the real ones, well below the 85% the researchers had expected. Andreea Pocol, a PhD candidate in Computer Science at the University of Waterloo and the study’s lead author, noted that people are “not as adept at making the distinction as they think they are.”

Participants often focused on details such as fingers, teeth, and eyes, but their judgments were frequently wrong. Pocol highlighted a crucial factor: the study let participants scrutinize each photo at length, a luxury typical internet users rarely take. “People who are just doomscrolling or don’t have time won’t pick up on these cues,” she explained. When images are consumed in a quick scroll through feeds full of polished, high-resolution visuals, AI fakes slip through even more easily.

The pace of AI development compounds the challenge. AI-generated images have become dramatically more realistic since the study began in late 2022, outpacing both academic research and legislative efforts. This creates fertile ground for malicious actors, who could use hyper-realistic images as political and cultural tools to fabricate scenarios involving public figures. Pocol described this as a “new AI arms race”: as the ability to generate convincing fakes advances, tools for identifying and countering them must advance just as quickly. For Tophinhanhdep.com, this underscores the importance of not only providing beautiful photography but also equipping users with the knowledge to navigate this complex visual landscape.

The Dual Nature of AI Imagery: Innovation and Deception

While the deceptive potential of AI images is a significant concern, their capacity for innovation in visual design and digital art is equally compelling. AI tools enable artists and designers to explore creative ideas, generate unique abstract images, and perform advanced photo manipulation that was previously unimaginable or prohibitively time-consuming. From creating unique mood boards for inspiration to generating custom aesthetics for wallpapers and backgrounds, AI opens up vast possibilities.

However, this innovative power carries inherent risks. The same tools that help generate thematic collections can also craft convincing hoaxes, and, as the fabricated images of public figures show, the line between creative expression and malicious misinformation can blur dangerously. Generative models do not create from scratch: they learn statistical patterns from their training datasets and synthesize new images from those patterns. This approach can yield groundbreaking art, but it can also reproduce biases and inaccuracies, whether unintended or intended. Understanding these foundations is crucial for anyone engaging with digital photography and visual content today, whether as a creator, a consumer, or a curator of image collections.

A Closer Look: Specific Indicators of AI Generation

Despite their rapid advancement, AI-generated images still often exhibit telltale imperfections that can betray their artificial origin. Developing an “eye” for these inconsistencies is an invaluable skill for anyone consuming digital media, including those seeking high-resolution images, stock photos, or even just daily aesthetic inspiration.

Anatomical Anomalies and Object Imperfections

AI, despite its sophistication, often struggles with the intricate complexities of human anatomy and the consistent rendering of physical objects.

- Hands and Limbs: This is perhaps the most famous giveaway. AI image generators are notoriously bad at rendering realistic hands. Common errors include:

- Extra or Missing Digits: Six fingers, four fingers, or an absent thumb.

- Malformed Features: Veiny palms, fused fingers, or fingers blending together.

- Unnatural Joints: Hands or limbs that bend in impossible ways or appear disjointed. The difficulty with hands is often attributed to two factors: hands occupy a relatively small part of the frame in most training images, and their anatomy is complex. Although models have improved, these issues persist, especially in less prominent areas of an image, such as people in the background of a large crowd, where missing limbs or distorted features may appear.

- Teeth, Eyes, and Ears: Similar to hands, these detailed human features often present challenges. Teeth can be uneven, too numerous, or unnaturally aligned. Eyes might exhibit an “Uncanny Valley” effect, appearing glassy, soulless, or mismatched in size and focus. Ears can be strangely shaped, asymmetrical, or appear to melt into the head. These subtle imperfections collectively contribute to an overall unnatural or unsettling appearance.

- Accessories and Props: Small, intricate objects held or worn by subjects are another common stumbling block for AI. Look for:

- Warped or Mismatched Jewelry: Earrings that aren’t the same size, rings that don’t correctly encircle a finger, or necklaces that hang at an impossible height.

- Floating or Deformed Objects: Coffee mugs that are elongated, pens that hover without being properly grasped, or tools like scissors or wrenches with missing or misaligned parts.

- Inconsistent Object Permanence: A walking cane might appear above a leg but fail to continue below it, suggesting the model doesn’t grasp the object’s full 3D structure. Blur sometimes appears exactly where such problem details would be, so a fully blurred accessory is a potential red flag.

Environmental and Textual Distortions

Beyond the immediate subject, the surrounding environment and any textual elements within an image can provide crucial clues about its artificial origins.

- Backgrounds: AI image generators frequently attempt to simplify or obscure complex backgrounds, often leading to subtle but discernible errors.

- Excessive Blurring: A heavily and uniformly blurred background can hide flaws that would be obvious if the scene were rendered sharply.

- Architectural Anomalies: Buildings might have misaligned steps, oddly curved walls, inexplicably sloped ceilings, or light fixtures that are inconsistent along a row or defy physics.

- Inconsistent Objects: An office chair might be disproportionately large, or a coffee table might have too few legs. In crowd scenes, the blurry background might conceal genuinely bizarre elements like three-eyed people or missing limbs.

- Hair: Like hands, individual strands and overall hair texture are difficult for AI to render perfectly.

- Texture Inconsistencies: A single head of hair might display a bizarre mix of sharp detail, soft wisps, blurred sections, and radical changes in texture.

- Structural Impossibilities: Hair might hover too high off the head, loop around unnaturally, or even morph into a scarf or another piece of clothing. Even if not overtly wrong, hair might just appear a little too thick or blurred in an unnatural way.

- Text: Image generators learn to reproduce what text looks like rather than to write meaningful, legible language.

- Garbled and Nonsensical Characters: Any text on storefronts, signs, posters, or clothing often appears as an alien script, misspelled words, or jumbled characters with inconsistent spacing. This is a strong indicator of AI generation, as the AI synthesizes letter shapes but lacks a semantic understanding of language.
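The garbled-text tell lends itself to a simple automated hint. The sketch below assumes you have already extracted any lettering from the image with an OCR tool of your choice and that you supply your own word list; the function name `legible_word_ratio` and the vocabulary are hypothetical illustrations, not part of any detection product. It reports what fraction of the extracted words are real words and returns `None` when no lettering was found.

```python
import re
from typing import Optional, Set


def legible_word_ratio(ocr_text: str, vocabulary: Set[str]) -> Optional[float]:
    """Fraction of alphabetic tokens in `ocr_text` that appear in `vocabulary`.

    Assumptions (hypothetical helper): `ocr_text` was produced by a separate
    OCR step, and `vocabulary` is a lowercase word list chosen by the caller.
    Returns None when there is no lettering to judge.
    """
    # Keep only runs of letters; digits and punctuation are ignored.
    tokens = re.findall(r"[A-Za-z]+", ocr_text)
    if not tokens:
        return None
    known = sum(1 for token in tokens if token.lower() in vocabulary)
    return known / len(tokens)


if __name__ == "__main__":
    words = {"open", "coffee", "shop", "daily"}
    print(legible_word_ratio("OPEN Coffee Shop Daily", words))  # 1.0
    print(legible_word_ratio("OPFN Cofee Shnp Dailv", words))   # 0.0
```

A low ratio is only a hint: stylized fonts, non-English signage, and ordinary OCR errors can all lower it for genuine photographs.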

The “Rendered” Aesthetic and Stereotypical Patterns

Many AI images possess a distinct visual signature that, while increasingly subtle, can still be perceived as unnatural.

- Overly “Rendered” Appearance: This refers to a glossy, overly smooth, or almost plastic-like sheen that resembles a high-quality video game character rather than a photograph. It lacks the minute imperfections and organic textures found in real-world photography. This “AI-looking” quality can manifest as an unrealistic crispness in the foreground combined with a blurry background, or an overall “airbrushed” feel where skin texture is too smooth, making subjects resemble Pixar characters. Inconsistent lighting, where light sources don’t align logically with the scene, also contributes to this artificial aesthetic.

- Stereotypes and Biases: AI models are trained on vast datasets, and if those datasets contain biases, the AI will replicate them. For example, prompting an AI for a “doctor” might consistently produce a white man in a lab coat, reflecting societal biases present in the training data rather than the diversity of the real world. AI can also reproduce common poses, compositions, or lighting conditions simply because these were abundant in its training material. Recognizing these stereotypical patterns can be a subtle clue, especially when searching for diverse stock photos or image inspiration on platforms like Tophinhanhdep.com.
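The “crisp subject, blurry background” signature described above can be put into numbers with the variance of the Laplacian, a common sharpness measure: sharp regions produce strong second-derivative responses, while blurred regions do not. The sketch below is a minimal pure-Python version that assumes you have already cropped a foreground region and a background region into grayscale pixel grids; the helper names are hypothetical. Genuine portraits shot with a shallow depth of field show the same contrast, so a high ratio is at most a weak clue, never proof.

```python
from statistics import pvariance
from typing import List

Gray = List[List[float]]  # rows of grayscale intensities, e.g. 0-255


def laplacian_variance(gray: Gray) -> float:
    """Variance of the 4-neighbour Laplacian over the region's interior pixels.

    Higher values mean more fine detail (sharper); near zero means smooth or blurred.
    """
    h, w = len(gray), len(gray[0])
    if h < 3 or w < 3:
        raise ValueError("region must be at least 3x3 pixels")
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    return float(pvariance(responses))


def sharpness_ratio(foreground: Gray, background: Gray) -> float:
    """How many times sharper the foreground crop is than the background crop."""
    fg = laplacian_variance(foreground)
    bg = laplacian_variance(background)
    if bg == 0:
        # Perfectly smooth background: infinitely sharper subject, or equal if both are flat.
        return float("inf") if fg > 0 else 1.0
    return fg / bg


if __name__ == "__main__":
    checker = [[255 if (x + y) % 2 else 0 for x in range(4)] for y in range(4)]
    flat = [[128] * 4 for _ in range(4)]
    print(laplacian_variance(checker))     # 1040400.0
    print(sharpness_ratio(checker, flat))  # inf
```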

The Broader Implications: Copyright, Ethics, and the Future

The proliferation of AI-generated images extends far beyond mere visual novelty, touching upon profound legal, ethical, and societal challenges that demand attention from individuals and platforms alike.

Copyright Infringement and Legal Battles

One of the most contentious aspects of AI-generated imagery is its relationship with copyright. AI systems are trained on massive collections of existing images, many of which are copyrighted. The question then arises: does this training constitute fair use, and do the generated outputs infringe upon existing intellectual property?

Tests conducted by movie concept artist Reid Southen and AI expert Gary Marcus, and replicated by journalists from The New York Times, produced striking examples of outputs that closely reproduce copyrighted material. When prompted with specific requests like “Joaquin Phoenix Joker movie, 2019, screenshot,” Midjourney generated images nearly identical to frames from copyrighted films. Even when explicit references were removed (“videogame hedgehog” for Sonic, “animated toys” for Pixar’s characters, or “popular movie screencap” for Iron Man), the AI produced remarkably similar results. This raises critical questions about whether AI companies are exploiting intellectual property without licensing it.

AI companies, including OpenAI, often argue that training on publicly accessible data falls under “fair use,” a U.S. legal doctrine that permits limited use of copyrighted material without permission. They also describe direct reproduction of training images, termed “memorization,” as a bug they are working to fix, one they say tends to occur when many near-identical images appear in the training data. However, memorization has also been observed with material that appears rarely in datasets, suggesting a deeper issue.

Numerous lawsuits are now testing these legal boundaries, including suits brought by authors such as John Grisham and Sarah Silverman, and a suit by The New York Times against OpenAI and Microsoft. As Keith Kupferschmid, president of the Copyright Alliance, stated, “Nobody knows how this is going to come out… This is a new frontier.” Midjourney has updated its terms of service to prohibit users from violating IP rights, and OpenAI offers ways for creators to opt out of its training process, but critics argue these measures are merely “Band-Aids on a bleeding wound” that fail to address the core issue. For a platform offering wallpapers, backgrounds, and digital art, understanding these legal ramifications is vital for ethical content curation and distribution.

Disinformation and Societal Trust

Beyond copyright, the potential for AI-generated images to be wielded as tools for disinformation poses a significant threat to societal trust and stability. As Pocol from the University of Waterloo warned, the extremely rapid rate of AI development makes it increasingly difficult to understand the potential for malicious or nefarious actions. The ease with which anyone can now create convincing fake images of public figures in compromising or embarrassing situations means that disinformation, though not new, has evolved into an entirely new beast.

The challenge is not just for experts; it threatens public discourse. “It may get to a point where people, no matter how trained they will be, will still struggle to differentiate real images from fakes,” Pocol cautioned. This erosion of trust in visual evidence can have profound impacts on politics, journalism, and personal relationships. The necessity of developing robust tools and strategies to identify and counter these fakes becomes an urgent global imperative, akin to an “AI arms race.” For Tophinhanhdep.com, this highlights a responsibility to not only provide beautiful photography but also to foster visual literacy and critical thinking among its users, ensuring that the visual content consumed is both inspiring and authentic.

Navigating the AI Visual Landscape: Detection Tools and Tophinhanhdep.com’s Role

In this complex and rapidly evolving visual environment, individuals and platforms must adapt. While the “AI arms race” continues, a combination of technological tools and sharpened human discernment remains our best defense.

Leveraging AI Detection Tools and Critical Thinking

For those who regularly interact with images—whether for professional use, personal enjoyment, or curating collections—developing skills to detect AI-generated content is becoming a crucial “superpower.” While human intuition is fallible, several resources can aid in the detection process:

- AI Detection Tools: A growing number of applications and browser extensions are designed to analyze images and provide a likelihood score of AI generation. Tools like “Hive AI Detector” can offer percentages (e.g., “85.9% likely to be AI-generated”) and even suggest which AI engine might have created the image or pinpoint artificial areas.

- Reverse Image Search: Tools like TinEye or Google’s reverse image search (now part of Google Lens) can help trace an image’s origin. If an image turns up in unusual contexts, is linked to dubious sources, or lacks a consistent history, it may be AI-generated. However, a freshly generated image may have no earlier copies to find, so an empty result proves nothing either way.

- Manual Inspection and Critical Thinking: Ultimately, the most robust approach involves combining technology with keen human observation. By consciously looking for the specific tells—deformed hands, garbled text, unnatural backgrounds, “rendered” sheen, or stereotypical representations—users can significantly boost their chances of spotting a fake. The critical thinking mindset involves questioning the source, context, and visual consistency of any image that seems “too good to be true” or subtly off. This vigilance is paramount as generative AI tools continue to improve, rendering purely automated detection methods increasingly fallible.
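TinEye and Google do not publish their exact matching pipelines, but a widely used building block for finding near-duplicate images is perceptual hashing. The sketch below implements the simple “average hash”: shrink the image to an 8x8 grid of block averages, set one bit for each cell brighter than the grid’s mean, and compare two fingerprints by counting differing bits (the Hamming distance). It assumes the pixels have already been decoded into a grayscale grid, and the function names are illustrative. A small distance suggests the same picture after resizing, brightening, or recompression; where to draw the cutoff is a tuning choice.

```python
from typing import List

Gray = List[List[float]]  # rows of grayscale intensities, e.g. 0-255


def average_hash(gray: Gray, size: int = 8) -> int:
    """Average hash (aHash): a size*size-bit fingerprint of an image."""
    h, w = len(gray), len(gray[0])
    if h < size or w < size:
        raise ValueError("image must be at least size x size pixels")
    # Downscale by averaging each block of pixels into one cell.
    cells = []
    for i in range(size):
        y0, y1 = i * h // size, (i + 1) * h // size
        for j in range(size):
            x0, x1 = j * w // size, (j + 1) * w // size
            block = [gray[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            cells.append(sum(block) / len(block))
    # One bit per cell: 1 if brighter than the mean of all cells.
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


if __name__ == "__main__":
    # A 16x16 horizontal gradient, a brightened copy, and an inverted copy.
    original = [[x * 16 for x in range(16)] for _ in range(16)]
    brighter = [[v + 10 for v in row] for row in original]
    inverted = [[255 - v for v in row] for row in original]
    print(hamming_distance(average_hash(original), average_hash(brighter)))  # 0
    print(hamming_distance(average_hash(original), average_hash(inverted)))  # 64
```

The brightened copy hashes identically because aHash compares each cell only to the image’s own mean, which is why this family of hashes survives simple edits that would defeat an exact byte-level comparison.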

The Role of Tophinhanhdep.com in a Hybrid Visual World

Tophinhanhdep.com positions itself as a comprehensive resource for all things visual, from stunning images to powerful image tools. In an age of pervasive AI imagery, this role takes on added significance. The platform’s commitment to high-resolution images, beautiful photography, and a diverse range of visual content aligns with a responsibility to guide users through the complexities of AI.

- Curating Authenticity: For categories like “Beautiful Photography,” “Nature,” “Sad/Emotional,” and “High Resolution,” Tophinhanhdep.com can prioritize verified, genuine photographs. Clear labeling of AI-generated content, where appropriate (e.g., in “Digital Art” or “Abstract” categories), ensures transparency and ethical practice.

- Empowering Creators and Users: The “Visual Design” section, encompassing Graphic Design, Digital Art, Photo Manipulation, and Creative Ideas, can leverage AI tools responsibly. This includes offering guidance on ethical AI usage, promoting AI for creative brainstorming, and educating on how to use tools like AI Upscalers to enhance existing images rather than fabricating new ones. Image tools like Converters, Compressors, and Optimizers remain essential for all types of images, whether real or AI-assisted.

- Providing Education and Resources: Tophinhanhdep.com can become a go-to source for understanding AI’s impact on visuals. This might involve articles, guides, or interactive features that highlight the latest AI detection methods, explain the nuances of copyright in the AI age, and showcase best practices for ethical image sourcing. By offering “Image Inspiration & Collections” with a focus on genuine photo ideas and thematic collections, the platform can champion the enduring value of human-created photography while acknowledging the innovative, yet challenging, role of AI.

The journey into a visually hybrid future, where the lines between the real and the artificial blur, requires constant adaptation and education. Tophinhanhdep.com can play a pivotal role in this journey, not just as a repository of images but as a trusted guide, helping users appreciate genuine visual content, responsibly harness new technologies, and navigate the intricate question: “Is it an AI generated image?”

In conclusion, the era of AI-generated images is here to stay, presenting both incredible creative opportunities and significant challenges to authenticity and trust. As AI models continue their rapid evolution, making generated visuals increasingly indistinguishable from reality, the “AI arms race” between generation and detection will intensify. While AI detection tools offer a layer of defense, the most potent countermeasure remains human vigilance, critical thinking, and an informed understanding of the tells that betray artificiality. Platforms like Tophinhanhdep.com are at the forefront of this new visual frontier, tasked with not only providing a vast array of inspiring images and useful tools but also with educating and empowering users to navigate the complexities of a world where seeing is no longer always believing. The future of visual content demands a blend of technological savvy, ethical responsibility, and unwavering critical discernment.