What is a Docker Image?

In the rapidly evolving landscape of modern software development and deployment, understanding core technologies is paramount. Among these, Docker stands out as a transformative platform, fundamentally changing how applications are built, shared, and run. At the heart of Docker’s innovation lies a seemingly simple yet profoundly powerful concept: the Docker image. For those accustomed to the rich visual tapestries found on Tophinhanhdep.com, where every image tells a story and serves a purpose, a Docker image can be thought of as the ultimate blueprint, a meticulously crafted template for a digital application, embodying precision, reproducibility, and aesthetic efficiency.

Imagine Tophinhanhdep.com, a hub for high-resolution photography and diverse visual assets, providing not just static images but dynamic, executable packages. In this analogy, a Docker image is not merely a picture; it’s a complete, self-contained environment, an intricate piece of visual design that encapsulates everything an application needs to function, from the underlying code to its runtime, system tools, libraries, and settings. It’s a read-only template, a snapshot of an application and its environment at a specific point in time, much like a perfectly captured photograph on Tophinhanhdep.com represents a specific moment. This standardized packaging ensures that an application behaves identically, regardless of where it’s deployed, fostering consistency that is as crucial in software as it is in maintaining the aesthetic integrity across a collection of wallpapers.

The concept of containerization, popularized by Docker, addresses a perennial challenge in software: the “it works on my machine” problem. Developers often grapple with discrepancies between their local development environments, staging servers, and production deployments. A Docker image resolves this by packaging the application and all its dependencies into a single, immutable unit. When this image is run, it becomes a container – a live, executable instance of the packaged software. This transition from static blueprint to dynamic execution is powered by Docker Engine, akin to how a static image on Tophinhanhdep.com comes alive on a screen, bringing its aesthetic to a user.

Available for both Linux and Windows-based applications, containerized software runs the same way regardless of the underlying infrastructure. Containers isolate software from its environment so that it behaves uniformly despite differences between, for instance, development and staging, with a reliability reminiscent of Tophinhanhdep.com’s commitment to delivering consistent, high-quality visual content. Docker’s container technology is characterized by three fundamental pillars:

- Standardization: Docker pioneered the industry standard for containers, ensuring their portability across any computing environment. This universal language for software packaging is as vital as standardized formats are for sharing diverse image types on Tophinhanhdep.com.

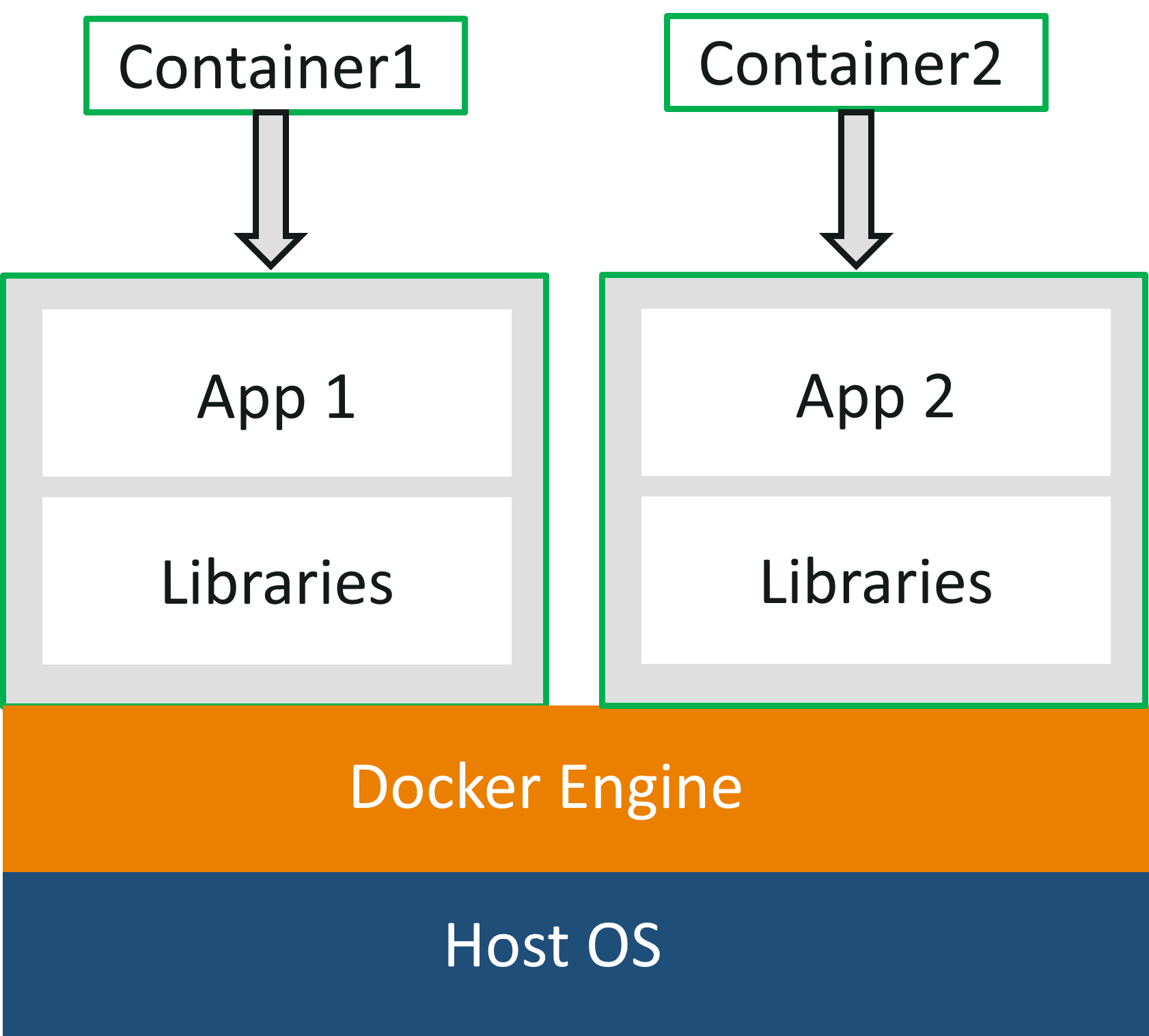

- Lightweight Design: Containers are designed to share the host machine’s operating system (OS) kernel. This eliminates the need for a full OS per application, boosting server efficiency and reducing server and licensing costs. This efficiency is analogous to Tophinhanhdep.com’s use of optimized image formats and compression tools, ensuring fast loading times without compromising visual quality.

- Security: By providing robust default isolation capabilities, Docker ensures that applications within containers are inherently safer, creating secure, isolated environments for digital workflows.

The Foundational Role of Docker Images in Modern Application Development

Docker container technology, initially launched in 2013 as an open-source Docker Engine, revolutionized application deployment. It skillfully leveraged existing computing concepts, particularly Linux primitives such as cgroups and namespaces, to create its distinct offering. Docker’s unique approach focused on enabling developers and system operators to disentangle application dependencies from the underlying infrastructure. This separation is key to its success, akin to how a visual designer on Tophinhanhdep.com might separate a foreground element from its background, allowing for independent manipulation and reuse.

The immense success in the Linux ecosystem paved the way for a crucial partnership with Microsoft, extending Docker containers and their functionality to Windows Server. Today, Docker’s technology, including its open-source project Moby, is widely adopted by major data center vendors and cloud providers, many of whom integrate Docker for their container-native Infrastructure as a Service (IaaS) offerings. Furthermore, leading open-source serverless frameworks predominantly rely on Docker container technology, underscoring its pervasive influence across the modern digital landscape. Just as Tophinhanhdep.com seeks to inspire and enable visual creativity across various platforms, Docker strives to empower consistent application deployment everywhere.

Anatomy of a Docker Image: Layers, Manifests, and the Container Layer

A Docker image is a meticulously structured collection of files that bundle together all the essentials required to configure a fully operational container environment. It encompasses installations, application code, and critical dependencies. Understanding its anatomy is crucial for effective use and optimization. Imagine a complex visual art piece on Tophinhanhdep.com; it’s not just one flat image, but a composition of various elements, each contributing to the final aesthetic.

The fundamental building blocks of a Docker image are its layers. Each layer represents a set of changes from the previous one, forming a series of intermediate images built one on top of the other. This hierarchical structure is pivotal for efficient lifecycle management. When a build instruction or its inputs change, Docker rebuilds only the corresponding layer and all subsequent layers, reusing cached copies of the unchanged layers beneath it rather than rebuilding the entire image. This layer caching significantly reduces computational work, making image builds fast and efficient. (Copy-on-write is a related but separate mechanism: it governs how a running container modifies files that live in read-only image layers.) For designers on Tophinhanhdep.com, this is akin to working with non-destructive layers in image editing software, where modifications to one layer don’t permanently alter the original, allowing for rapid iteration and optimization. Placing the instructions that change most often as late as possible in the Dockerfile, and therefore higher up the stack, keeps rebuild times to a minimum.
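The rebuild behavior described above can be modeled in a few lines of Python. This is a toy sketch, not Docker's actual cache implementation: the function names layer_cache_keys and layers_to_rebuild are hypothetical, and the model keys each layer only on the instruction text, whereas a real build also takes the contents of copied files into account.

```python
import hashlib


def layer_cache_keys(instructions):
    """Return one cache key per instruction; each key also covers every earlier instruction."""
    keys = []
    parent_key = ""  # the first instruction has no parent layer
    for instruction in instructions:
        material = parent_key + "\n" + instruction
        parent_key = hashlib.sha256(material.encode("utf-8")).hexdigest()
        keys.append(parent_key)
    return keys


def layers_to_rebuild(old_instructions, new_instructions):
    """Indices in new_instructions whose layer cannot be reused from the old build."""
    old_keys = layer_cache_keys(old_instructions)
    new_keys = layer_cache_keys(new_instructions)
    return [
        index
        for index, key in enumerate(new_keys)
        if index >= len(old_keys) or key != old_keys[index]
    ]


before = [
    "FROM ubuntu:18.04",
    "RUN apt-get update && apt-get install -y nginx",
    "COPY site/ /var/www/html/",
    'CMD ["nginx", "-g", "daemon off;"]',
]
after = list(before)
after[2] = "COPY site-v2/ /var/www/html/"  # only the third instruction changes

print(layers_to_rebuild(before, after))  # [2, 3]
```

Because every key is derived from its parent's key, a change in the third instruction also invalidates the fourth, while the first two layers are reused. That is why frequently changing steps belong near the end of the build.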

At the base of this hierarchy lies the parent image. In most cases, this is the first layer upon which all other layers are built, providing the fundamental building blocks for your container environments. Docker Hub, the default public registry, much like a vast gallery of high-quality stock photos on Tophinhanhdep.com, offers a wide array of ready-made parent images. These range from stripped-down Linux distributions to images pre-installed with services like database management systems (DBMS) or content management systems (CMS). Alternatively, advanced users can opt for a base image, which is an empty first layer, allowing them to build Docker images entirely from scratch. This offers ultimate control over the image’s contents, a true blank canvas for digital art.

Beyond the layered files, a Docker image also includes a crucial manifest. This file, in JSON format, serves as a machine-readable description of the image. In current manifest formats it identifies the image’s configuration and each of its layers by content digest, which allows their integrity to be verified; for multi-platform images, a manifest list (also called an image index) maps each host platform to the matching manifest. The manifest is the metadata, the detailed description that accompanies a high-resolution image on Tophinhanhdep.com, providing context, usage rights, and technical specifications. It’s the silent narrator, ensuring that the image can be understood and utilized correctly across different environments.
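To make the manifest less abstract, here is a minimal sketch that builds and reads one in Python. It assumes the layout of the Docker image manifest version 2, schema 2 (a config descriptor plus a list of layer descriptors); the digests and sizes are made-up placeholders, and placeholder_digest and summarize_manifest are hypothetical helper names.

```python
import json


def placeholder_digest(char):
    """Build a fake sha256 digest string; real digests are hashes of the content."""
    return "sha256:" + char * 64


# Illustrative manifest; every size and digest below is invented for the example.
manifest_text = json.dumps(
    {
        "schemaVersion": 2,
        "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
        "config": {
            "mediaType": "application/vnd.docker.container.image.v1+json",
            "size": 1500,
            "digest": placeholder_digest("a"),
        },
        "layers": [
            {
                "mediaType": "application/vnd.docker.image.rootfs.diff.tar.gzip",
                "size": 28000000,
                "digest": placeholder_digest("b"),
            },
            {
                "mediaType": "application/vnd.docker.image.rootfs.diff.tar.gzip",
                "size": 52000000,
                "digest": placeholder_digest("c"),
            },
        ],
    },
    indent=2,
)


def summarize_manifest(text):
    """Extract the config digest, the layer digests, and the total compressed layer size."""
    manifest = json.loads(text)
    layers = manifest["layers"]
    return {
        "config_digest": manifest["config"]["digest"],
        "layer_digests": [layer["digest"] for layer in layers],
        "total_layer_bytes": sum(layer["size"] for layer in layers),
    }


summary = summarize_manifest(manifest_text)
print(len(summary["layer_digests"]), "layers,", summary["total_layer_bytes"], "bytes")
```

Since each layer is named by the digest of its content, a client can tell which layers it already has and can verify whatever it downloads.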

Finally, when Docker launches a container from an image, it adds a thin writable layer on top, known as the container layer. This ephemeral layer stores all changes made to the container during its runtime. Since this writable layer is the only difference between a live operational container and its source Docker image, multiple containers can efficiently share access to the same underlying image while maintaining their unique, individual states. This is an incredibly powerful resource-saving mechanism, comparable to Tophinhanhdep.com’s ability to serve countless users with instances of the same image file, each user experiencing it uniquely but drawing from a common, optimized source.
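The sharing described above can be illustrated with Python's collections.ChainMap, which looks keys up across several dictionaries but writes only to the first one. This is a deliberately simplified model: dictionaries stand in for filesystem layers, file deletion is not modeled, and start_container is a hypothetical helper, not a Docker API.

```python
from collections import ChainMap

# Read-only image layers, lowest first. File contents are placeholder strings.
image_layers = [
    {"/etc/os-release": "Ubuntu 18.04"},
    {"/usr/sbin/nginx": "<binary>", "/etc/nginx/nginx.conf": "worker_processes 1;"},
]


def start_container(layers):
    """Stack a fresh writable layer on top of the shared image layers."""
    writable_layer = {}
    # ChainMap searches its maps left to right, so the topmost layer comes first.
    return ChainMap(writable_layer, *reversed(layers))


web1 = start_container(image_layers)
web2 = start_container(image_layers)

# Modifying a file in one container only touches that container's writable layer.
web1["/etc/nginx/nginx.conf"] = "worker_processes 4;"

print(web1["/etc/nginx/nginx.conf"])             # worker_processes 4;
print(web2["/etc/nginx/nginx.conf"])             # worker_processes 1;
print(image_layers[1]["/etc/nginx/nginx.conf"])  # worker_processes 1;
print(web1.maps[0])                              # only the changed file
```

Both containers read the same layer objects, so the image is stored once no matter how many containers run from it; each container pays only for what it changes.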

The Docker Ecosystem: Architecture, Processes, and Tophinhanhdep.com’s Vision

The Docker platform operates on a robust client-server architecture, providing an open framework for developing, shipping, and running applications. It streamlines software delivery by decoupling applications from their infrastructure, allowing for rapid and consistent deployment, much like Tophinhanhdep.com aims for consistent and high-quality delivery of visual assets. By embracing Docker’s methodologies for shipping, testing, and deploying code, organizations can dramatically reduce the time lag between code creation and production readiness.

Building, Sharing, and Running: The Journey from Image to Container

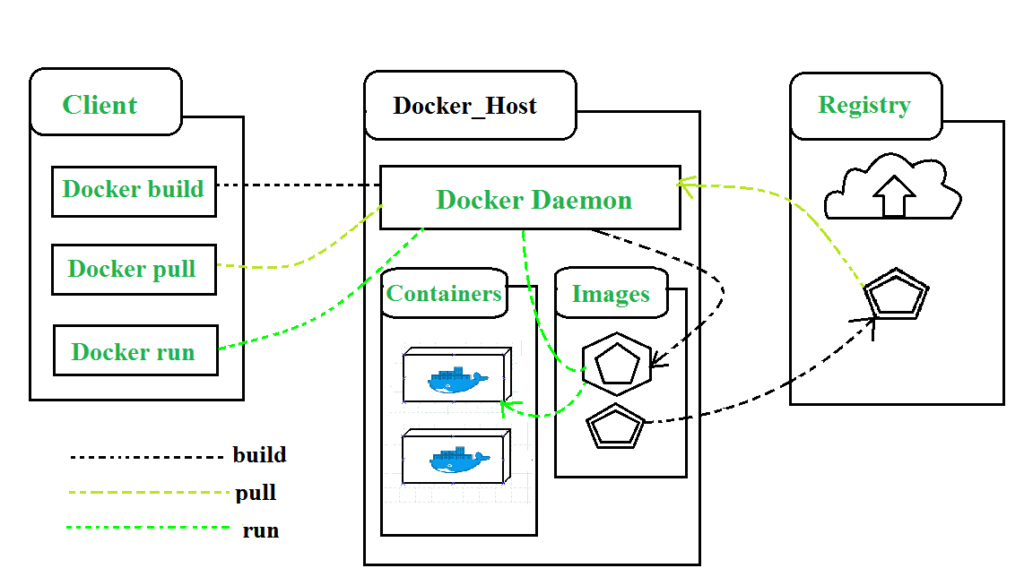

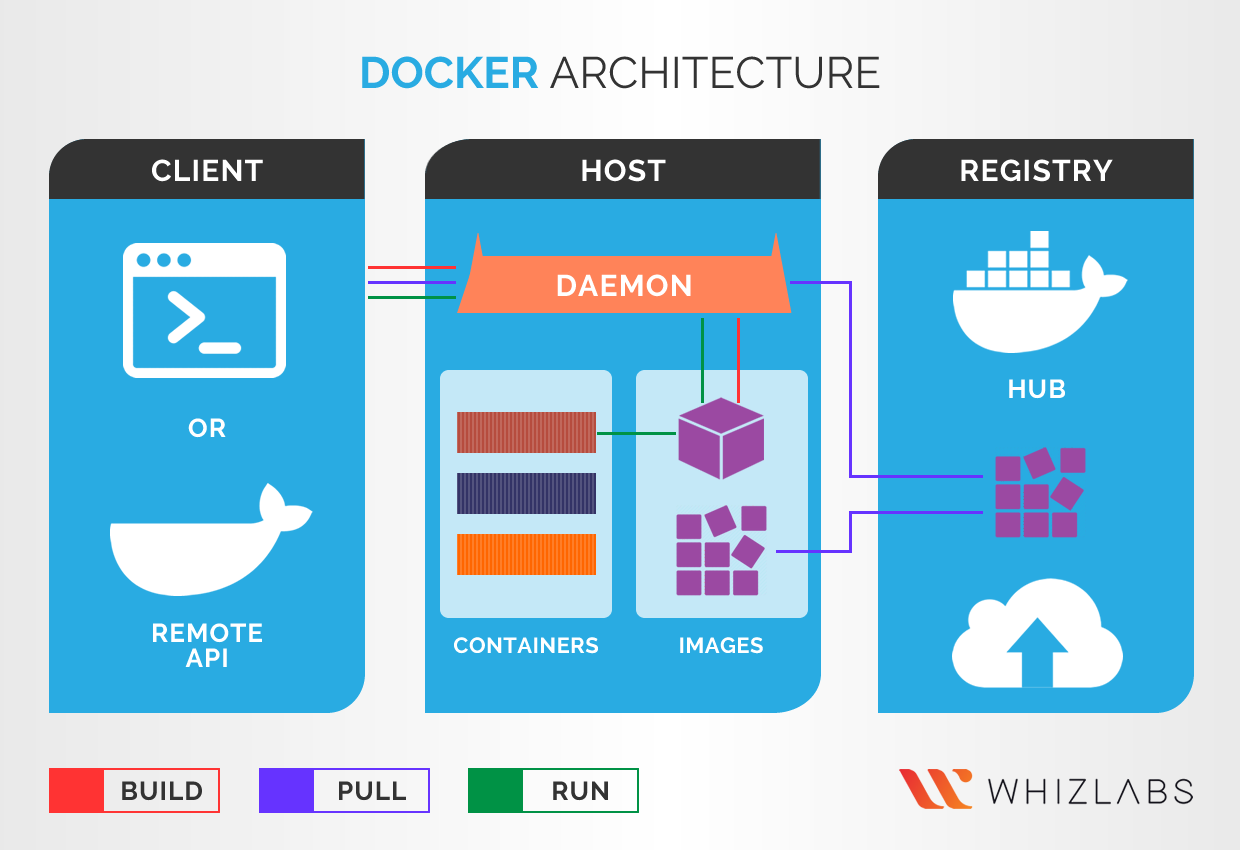

The core interaction within the Docker ecosystem revolves around three key components: the Docker client, the Docker daemon, and Docker registries.

- The Docker Client: The primary way users interact with Docker. When you issue commands like docker run or docker build, the client acts as your interface, sending these commands to the Docker daemon. It’s the user-friendly portal, akin to the intuitive search and download interface on Tophinhanhdep.com, translating user requests into actionable instructions. The Docker client can be the standalone command-line interface (CLI) or integrated into tools like Docker Desktop, which bundles essential Docker components for various operating systems.

- The Docker Daemon (dockerd): This is the workhorse of Docker, listening for Docker API requests and managing Docker objects such as images, containers, networks, and volumes. The daemon performs the heavy lifting, executing the instructions received from the client. It’s the powerful engine behind the scenes, processing, creating, and managing all the digital assets, much like the advanced algorithms and server infrastructure that manage the vast collections and services on Tophinhanhdep.com. Daemons can also communicate with each other to manage Docker services across a cluster.

- Docker Registries: These are services that store and distribute Docker images, organized into named repositories. They are crucial for sharing images across teams, organizations, or the wider public. Docker Hub is the default and most popular public registry, hosting over 100,000 container images shared by software vendors, open-source projects, and the Docker community. It serves as an invaluable repository of pre-built images, from operating system baselines to complex application stacks. Users can pull required images from the registry using the docker pull or docker run commands, and push their own custom images to it using docker push. Beyond Docker Hub, organizations can leverage third-party registry services (like Amazon ECR or Azure Container Registry) or even host their own private registries for enhanced security and compliance, mirroring how specialized visual archives might exist alongside public image platforms.

Let’s illustrate the journey from an image to a running container with a simple docker run command, mirroring how an abstract idea becomes a concrete visual on Tophinhanhdep.com:

$ docker run -i -t ubuntu /bin/bash

Assuming default registry configuration, here’s what happens:

- Image Pull: If the ubuntu image isn’t available locally, Docker, by default, searches for and pulls it from Docker Hub. This is like searching for a specific background image on Tophinhanhdep.com and downloading it to your local machine.

- Container Creation: Docker then creates a new container instance from this image. The image acts as a read-only template, ensuring consistency.

- Filesystem Allocation: A new, writable filesystem layer is allocated to the container. This is the “container layer” discussed earlier, allowing the running container to create or modify files without altering the original image.

- Network Interface: Docker establishes a network interface to connect the container to the default network, assigning it an IP address. Containers can then connect to external networks via the host machine.

- Execution: Finally, Docker starts the container and executes the specified command (/bin/bash in this case). Because the container is run interactively and attached to the terminal (the -i and -t flags), users can interact with it directly. When the command finishes or the user exits, the container stops but isn’t automatically removed, preserving its state for potential restart or inspection.

This process highlights Docker’s ability to take a standardized “visual blueprint” (the image) and bring it to life as an isolated, interactive “digital artwork” (the container), managing all its underlying components from creation to execution.
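The first step above relies on Docker expanding the short name ubuntu into a fully qualified reference before contacting a registry. The Python sketch below approximates that expansion under the default configuration. It is a simplified illustration, not Docker's actual parser: normalize_image_ref is a hypothetical name, the input is not validated, and a tag is dropped when a digest is also given.

```python
def normalize_image_ref(ref):
    """Expand a short image reference to registry/path:tag (simplified)."""
    # Split off an optional digest, as in "name@sha256:...".
    name, _, digest = ref.partition("@")

    # A colon counts as a tag separator only if it comes after the last slash;
    # otherwise it belongs to a registry host such as "localhost:5000".
    last_slash = name.rfind("/")
    last_colon = name.rfind(":")
    tag = ""
    if last_colon > last_slash:
        name, tag = name[:last_colon], name[last_colon + 1:]

    # The first path component is a registry host only if it looks like one.
    first, slash, rest = name.partition("/")
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", name

    # Official images on the default registry live under "library/".
    if registry == "docker.io" and "/" not in path:
        path = "library/" + path

    suffix = "@" + digest if digest else ":" + (tag or "latest")
    return registry + "/" + path + suffix


print(normalize_image_ref("ubuntu"))                  # docker.io/library/ubuntu:latest
print(normalize_image_ref("ubuntu:18.04"))            # docker.io/library/ubuntu:18.04
print(normalize_image_ref("localhost:5000/app:1.0"))  # localhost:5000/app:1.0
```

The expansion shows why a bare docker run ubuntu pulls from the default public registry and why omitting a tag means latest.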

Crafting Docker Images: Methods, Best Practices, and Comparative Advantages

Creating Docker images is fundamental to leveraging the platform’s power. There are two primary methods, each with its own advantages and suitable use cases, much like Tophinhanhdep.com offers different tools for image editing, from quick adjustments to detailed photo manipulation.

The Dockerfile: A Blueprint for Reproducible Visuals and Optimized Deployments

The Dockerfile method is the gold standard for creating Docker images, especially for real-world, enterprise-grade deployments. A Dockerfile is a simple plain-text file containing a sequence of instructions that Docker executes to build an image. It’s essentially a recipe, a script that outlines every step, from selecting a base image to installing dependencies, copying application code, and configuring runtime settings. This method promotes reproducibility, clarity, and ease of integration into Continuous Integration/Continuous Delivery (CI/CD) pipelines. Think of it as the detailed graphic design brief or the meticulously documented editing style on Tophinhanhdep.com, ensuring that every element is in place and the final output is consistently high quality.

Here’s a breakdown of common Dockerfile commands:

- FROM: Specifies the parent image (e.g., FROM ubuntu:18.04).

- WORKDIR: Sets the working directory for subsequent instructions.

- RUN: Executes commands during the image build process (e.g., RUN apt-get update && apt-get install -y nginx).

- COPY: Copies files or directories from the build context into the image.

- ADD: Similar to COPY, but can also handle remote URLs and automatically unpack compressed files.

- ENTRYPOINT: Defines the command that will always be executed when the container starts.

- CMD: Provides default arguments for the ENTRYPOINT or the command to execute if no ENTRYPOINT is defined.

- EXPOSE: Informs Docker that the container listens on the specified network ports at runtime.

- LABEL: Adds metadata to the image, useful for organization and information.
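The interplay between ENTRYPOINT and CMD is the part of this list that most often causes confusion, so here is a small Python model of it. It is a sketch under stated assumptions: it covers only the exec (JSON array) form of both instructions, it ignores the --entrypoint override of docker run, and effective_command is a hypothetical helper name.

```python
def effective_command(entrypoint=None, cmd=None, run_args=None):
    """Return the argument list a container would start with (exec form only).

    Arguments passed to docker run after the image name replace CMD;
    ENTRYPOINT, if set, always stays in front.
    """
    entrypoint = entrypoint or []
    cmd = cmd or []
    run_args = run_args or []
    return entrypoint + (run_args if run_args else cmd)


# CMD only: the image's default command runs unless arguments replace it.
print(effective_command(cmd=["nginx", "-g", "daemon off;"]))
print(effective_command(cmd=["nginx", "-g", "daemon off;"], run_args=["/bin/bash"]))

# ENTRYPOINT plus CMD: CMD supplies default arguments that can be replaced.
print(effective_command(entrypoint=["nginx"], cmd=["-g", "daemon off;"]))
print(effective_command(entrypoint=["nginx"], cmd=["-g", "daemon off;"], run_args=["-v"]))
```

In this model, an image that sets only CMD behaves like a default that any docker run argument replaces, while an image that sets ENTRYPOINT always runs that program and treats the extra arguments as its parameters.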

An example Dockerfile for building an NGINX server on Ubuntu might look like this:

    # Use the official Ubuntu 18.04 image as the parent image
    FROM ubuntu:18.04

    # Maintainer information (optional but good practice)
    LABEL maintainer="simpli@Tophinhanhdep.com"

    # Install nginx and curl, then remove the apt package lists to keep the layer small
    RUN apt-get update && \
        apt-get upgrade -y && \
        apt-get install -y nginx curl && \
        rm -rf /var/lib/apt/lists/*

    # Document that the container listens on port 80 for web traffic
    EXPOSE 80

    # Default command: run NGINX in the foreground so the container keeps running
    CMD ["nginx", "-g", "daemon off;"]

When building an image, it’s also good practice to create a .dockerignore file. This file lists files and directories that should be excluded from the build context, preventing sensitive or unnecessary data from being copied into the image. This practice is akin to a photographer carefully curating files for a portfolio on Tophinhanhdep.com, omitting raw, unedited, or confidential elements to present a polished and professional final product. By trimming the build context, .dockerignore contributes to faster builds and more compact images.

To build the image, you navigate to the directory containing the Dockerfile and use the docker build command:

$ docker build -t my-nginx-visual:1.0 .

The -t flag tags the image with a name and version, and . specifies the current directory as the build context. Once built, docker images will display your newly created image, ready for deployment.

Advantages of the Dockerfile Method:

- Reproducibility: Builds are consistent and repeatable, ensuring the same environment every time.

- Version Control: Dockerfiles can be managed with source control systems (e.g., Git), tracking changes and facilitating collaboration.

- Efficiency: Optimized layer caching and .dockerignore reduce build times and image sizes.

- Automation: Easily integrated into automated CI/CD pipelines, supporting rapid, reliable deployments.

- Self-Documentation: The Dockerfile serves as a clear, human-readable record of how the image was assembled.

The Dockerfile method aligns perfectly with Tophinhanhdep.com’s emphasis on high resolution and digital photography best practices, where a well-defined process leads to superior, consistent results.

The Interactive Method: Rapid Prototyping and Exploration of Application Environments

While the Dockerfile method is preferred for production, the interactive method offers a quicker, simpler way to create Docker images, ideal for testing, troubleshooting, determining dependencies, and rapid prototyping. This approach is like a quick edit or a filter application on an image on Tophinhanhdep.com – fast, direct, and useful for immediate visual adjustments.

The process involves:

- Launching an existing image: Start a container from an existing image, typically into an interactive shell session (e.g., docker run -it ubuntu bash).

- Manually configuring the environment: Inside the running container, you manually install software, make configurations, and install dependencies, similar to what you would define in a Dockerfile’s RUN commands. For example, apt-get update && apt-get install -y nginx.

- Saving the state: Once the desired state is achieved, you “commit” the running container’s changes into a new image using the docker commit command, specifying the container’s ID or name (e.g., docker commit keen_gauss ubuntu_testbed).

- Verifying the new image: Use docker images to see your newly created image listed.
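Conceptually, the commit step freezes the container's writable layer into a new read-only layer on top of the original image. The Python sketch below models that idea with dictionaries standing in for filesystem layers. It is an illustration only: commit here is a hypothetical function, not the Docker CLI, and the file names and contents are placeholders.

```python
def commit(image_layers, writable_layer):
    """Return a new image: the original layers plus a frozen copy of the container's changes."""
    return image_layers + [dict(writable_layer)]


base_image = [{"/bin/bash": "<binary>"}]

# Everything written inside the running container ends up in its writable layer,
# including leftovers that nobody cleaned up by hand.
container_changes = {
    "/usr/sbin/nginx": "<binary>",
    "/var/lib/apt/lists/index": "<package index left behind by apt-get update>",
}

testbed_image = commit(base_image, container_changes)

print(len(base_image))            # 1, the source image is unchanged
print(len(testbed_image))         # 2, one extra layer holds all manual changes
print(sorted(testbed_image[-1]))  # the leftover package index is baked in too
```

The model also hints at the main drawback of this method: whatever happened to be in the container at commit time is captured as-is, and nothing records the steps that produced it.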

Advantages of the Interactive Method:

- Speed and Simplicity: Fastest way to create a basic image or experiment with software.

- Exploration: Excellent for exploring a new environment or debugging issues within a running container.

- Dependency Discovery: Helps in understanding what dependencies an application truly needs.

Disadvantages:

- Lack of Reproducibility: Manual steps are prone to human error and difficult to repeat consistently.

- Suboptimal Images: Often results in larger images with unnecessary layers and packages, as cleanup steps are harder to automate.

- Poor Lifecycle Management: Difficult to track changes or integrate into automated workflows.

While useful for quick exploration, the interactive method is less suitable for robust, production-ready images where Tophinhanhdep.com’s standards for “high resolution” and “optimized” images are paramount. It’s a tool for quick creative ideas, not for final, polished visual design.

Docker Images vs. Virtual Machines: A Strategic Choice for Efficiency and Portability

To truly grasp the efficiency and transformative power of Docker images, it’s essential to compare them with another common virtualization technology: Virtual Machines (VMs). While both offer resource isolation and allocation benefits, their operational mechanisms and architectural approaches differ significantly, influencing performance, portability, and resource consumption. This comparison is like evaluating different digital photography formats on Tophinhanhdep.com, each with its own trade-offs in terms of file size, quality, and editing flexibility.

Virtual Machines (VMs): VMs represent an abstraction of physical hardware. A hypervisor (e.g., VMware, VirtualBox) allows multiple VMs to run on a single physical machine. Each VM operates as if it were a complete, independent computer.

- Full OS Copy: Critically, each VM includes a full copy of an operating system (Guest OS), along with the application, necessary binaries, and libraries. This makes VMs typically tens of gigabytes in size.

- Hardware Virtualization: VMs virtualize the entire hardware stack, from the CPU to memory, storage, and network interfaces.

- Strong Isolation: The isolation between VMs is very strong, as each has its own kernel and OS.

- Slower Boot Times: Due to booting an entire OS, VMs can be slow to start.

- Resource Intensive: Running multiple VMs requires significant system resources, as each OS consumes its own set of CPU, memory, and disk space.

Docker Containers (built from Docker Images): Containers offer an abstraction at the application layer, virtualizing the operating system rather than the hardware.

- Shared OS Kernel: Containers share the host machine’s OS kernel, eliminating the need for a full OS copy within each container. This makes container images incredibly lightweight, typically tens of megabytes in size.

- OS Virtualization: Containers package the application code and its dependencies into isolated user spaces, running as distinct processes directly on the host OS kernel.

- Lightweight and Fast: Containers boot almost instantly and consume far fewer resources than VMs, allowing for a much higher density of applications on the same hardware. This aligns with Tophinhanhdep.com’s goal of fast loading times and efficient resource use.

- High Portability: Because they don’t carry a full OS, containers are highly portable, running consistently across any environment where Docker Engine is installed, from a developer’s laptop to a cloud server.

- Efficient Scaling: Their lightweight nature makes them ideal for dynamically scaling applications up or down in near real-time.

The Synergistic Approach: It’s important to note that containers and VMs are not mutually exclusive; they can be used together to great effect. Many organizations deploy Docker containers within VMs, leveraging the strong isolation of VMs as a foundational layer, while gaining the agility and efficiency of containers for application deployment. This hybrid approach provides a robust and flexible deployment strategy, combining the strong isolation of VMs with the lightweight, optimized nature of containerized applications.

In essence, while VMs offer maximum isolation at the cost of overhead, Docker images and containers provide efficient, lightweight, and highly portable environments. This makes them a compelling alternative for modern, cloud-native applications, allowing businesses to achieve more with fewer resources, a core principle echoed in Tophinhanhdep.com’s commitment to providing high-quality visuals efficiently.

The Transformative Power of Docker Images: Efficiency, Portability, and Scale

The impact of Docker images extends beyond mere technical definitions, driving significant improvements in the software development lifecycle. Their core characteristics enable faster delivery, greater consistency, and more efficient resource utilization across diverse environments. These advantages align with the mission of Tophinhanhdep.com, which strives to offer a vast array of aesthetic and high-resolution images that are both accessible and inspiring, optimized for various uses.

Accelerating Software Delivery and Ensuring Consistency

Docker images fundamentally streamline the entire development lifecycle. Developers can work in standardized environments using local containers, which precisely replicate the production environment. This consistency is a game-changer, eliminating configuration drift and environmental discrepancies that historically plagued software projects. For teams on Tophinhanhdep.com working on collaborative visual design projects, imagine having a perfectly consistent workspace and toolset, ensuring everyone sees and works with the same ‘digital canvas.’

Consider a typical CI/CD (Continuous Integration/Continuous Delivery) workflow:

- Development: Developers write code locally within a Docker container. They can easily share their work with colleagues by sharing the Docker image, ensuring everyone has the exact same application and environment. This is like sharing a perfectly curated mood board or thematic collection on Tophinhanhdep.com, providing a consistent reference point.

- Testing: The application, packaged as a Docker image, is pushed to a test environment. Automated and manual tests are run within containers launched from this image. If bugs are found, they can be fixed in the development environment and quickly redeployed to the test environment, with the certainty that the testing environment accurately reflects the development setup.

- Deployment: Once testing is complete, deploying the application to production is as simple as pushing the updated image to the production environment. This process works identically, whether the production environment is an on-premises data center, a public cloud provider, or a hybrid setup.

This seamless flow drastically reduces the delay between writing code and running it in production, enhancing agility and responsiveness. Docker’s container-based platform allows for highly portable workloads. Docker containers can run on any Docker-compatible host, from a developer’s laptop to physical servers, virtual machines, and various cloud providers. This portability, much like a versatile stock photo on Tophinhanhdep.com, means an application developed once can be deployed anywhere, retaining its integrity and functionality. This lightweight and portable nature also facilitates responsive deployment and scaling, allowing organizations to dynamically manage workloads, scaling applications and services up or tearing them down in near real-time as business needs fluctuate. This adaptability is critical for delivering a fluid user experience, whether for a web application or for serving trending styles of wallpapers.

Maximizing Hardware Utilization: A Cost-Effective Visual Solution

Docker’s lightweight and fast nature provides a viable and cost-effective alternative to hypervisor-based virtual machines for many use cases. By sharing the host OS kernel, containers require significantly fewer resources than VMs, allowing for a much higher density of applications on the same hardware. This translates directly into greater server capacity utilization, enabling organizations to run more workloads on existing infrastructure and achieve business goals with reduced hardware footprint and associated costs.
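A back-of-the-envelope calculation shows where the density gain comes from. Every number in the Python sketch below is a hypothetical input chosen for illustration, not a measurement; max_instances is a made-up helper, and only memory is considered.

```python
def max_instances(host_ram_mb, app_ram_mb, per_instance_overhead_mb):
    """How many instances fit in host memory if each needs app + overhead."""
    return host_ram_mb // (app_ram_mb + per_instance_overhead_mb)


# Hypothetical inputs, for illustration only.
HOST_RAM_MB = 65536              # a host with 64 GiB of memory
APP_RAM_MB = 512                 # memory used by the application itself
GUEST_OS_OVERHEAD_MB = 1024      # assumed cost of a full guest OS per VM
CONTAINER_OVERHEAD_MB = 16       # assumed per-container bookkeeping cost

vm_count = max_instances(HOST_RAM_MB, APP_RAM_MB, GUEST_OS_OVERHEAD_MB)
container_count = max_instances(HOST_RAM_MB, APP_RAM_MB, CONTAINER_OVERHEAD_MB)

print(vm_count)         # 42
print(container_count)  # 124
```

With these assumed figures the same host holds roughly three times as many containers as VMs. The actual ratio depends entirely on the workload and on how large the guest OS overhead really is, so the inputs should be replaced with measured values before drawing conclusions.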

For high-density environments, or for small and medium deployments with limited resources, Docker images are an ideal solution. They empower organizations to do more with less, optimizing resource allocation with an efficiency that echoes Tophinhanhdep.com’s approach to image compression and optimization, delivering high-quality visual experiences with minimal bandwidth and storage. This efficiency not only saves money but also contributes to a greener computing footprint by reducing energy consumption. Docker images are not just about technical innovation; they are about economic and environmental stewardship, ensuring that digital applications, much like beautifully composed photography, are delivered sustainably and efficiently.

In conclusion, the Docker image is more than just a software package; it is the cornerstone of a revolutionized approach to application development and deployment. Much like the expertly curated and diverse collections of visual assets on Tophinhanhdep.com, Docker images represent standardized, optimized, and highly portable units that empower developers and organizations to build, share, and run applications with unprecedented consistency and efficiency. From accelerating software delivery to maximizing hardware utilization and fostering a robust ecosystem of tools and registries (including Docker Hub), Docker images continue to shape the future of digital infrastructure, transforming creative ideas into reliable, visually consistent, and scalable software solutions.